tt-torch

NOTE: TT-Torch is deprecated. To work with PyTorch and the various features available in TT-Torch, please see the documentation for TT-XLA.

tt-torch is a PyTorch 2.0 and torch-mlir based front end for tt-mlir.

tt-torch uses venv to keep track of all dependencies. After building, you can activate the venv by running the following from the project root directory:

source env/activate

The currently supported models can be found here. There is a brief demo showing how to use the compiler in demos/resnet/resnet50_demo.py.

The general compile flow is:

- PyTorch model -> torch.compile, which creates an FX graph

- Several compiler passes on the FX graph, including consteval and dead code removal

- Conversion to torch-mlir -> torch-backend-mlir -> StableHLO through torch-mlir

- Conversion to TTIR -> TTNN -> flatbuffer through tt-mlir

- Creating an executor with the flatbuffer and passing it back to the user

- Copying inputs to the device and executing the flatbuffer through tt-mlir on each user invocation

To speed up model bring-up, users have the option of compiling models op-by-op. This allows the model's ops to be tested in parallel, since compilation does not stop at the first error. If op-by-op compilation is enabled (see Controlling Compiler Behavior), compilation stops after step 2, and the FX graph is passed to the executor, which is returned to the user. Upon execution, whenever a new, unique op is seen (based on the op type and the shapes of its inputs), a new FX graph is created containing just that one operation with its inputs and outputs. This small graph then proceeds through steps 3-4 and is executed in place.
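The deduplication rule described above (an op counts as new when its op type or input shapes differ from everything seen so far) can be illustrated with a small, framework-free sketch. It is not tt-torch's implementation: the node representation, the unique_ops helper, and the op names in the sample graph are hypothetical stand-ins for FX graph nodes.

```python
from typing import List, Tuple

# Hypothetical, simplified node representation: (op type, input shapes).
# Real tt-torch works on torch.fx graph nodes instead.
Shape = Tuple[int, ...]
OpNode = Tuple[str, Tuple[Shape, ...]]


def unique_ops(nodes: List[OpNode]) -> List[OpNode]:
    """Keep only the first occurrence of each (op type, input shapes) pair.

    Later nodes with the same key are skipped, mirroring the idea that a
    repeated op only needs to be compiled and executed once.
    """
    seen = set()
    unique = []
    for op_type, input_shapes in nodes:
        key = (op_type, input_shapes)
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


graph = [
    ("aten.add.Tensor", ((5, 5), (5, 5))),
    ("aten.relu.default", ((5, 5),)),
    ("aten.add.Tensor", ((5, 5), (5, 5))),  # duplicate: same op type and shapes
    ("aten.add.Tensor", ((2, 3), (2, 3))),  # new: same op type, different shapes
]
for op in unique_ops(graph):
    print(op)
```

Keying on both the op type and the input shapes is what makes the third node a duplicate while the fourth is still treated as new.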

Results of each unique op execution are stored in a JSON file, which can later be parsed into a spreadsheet or uploaded to a database.
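As a rough sketch of that post-processing step, the snippet below flattens per-op results into CSV, which any spreadsheet tool can open. The record fields (op_type, input_shapes, status) and the op_results_to_csv helper are assumptions for illustration only; check the JSON that tt-torch actually writes and adjust the field names to match.

```python
import csv
import io
import json

# Hypothetical record layout; the real tt-torch JSON schema may differ.
FIELDS = ["op_type", "input_shapes", "status"]


def op_results_to_csv(json_text: str) -> str:
    """Flatten a JSON list of per-op result records into CSV text."""
    records = json.loads(json_text)
    buffer = io.StringIO()
    # extrasaction="ignore" drops any fields not listed in FIELDS.
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for record in records:
        row = dict(record)
        # Nested shape lists are serialized back to a JSON string for one CSV cell.
        row["input_shapes"] = json.dumps(record.get("input_shapes", []))
        writer.writerow(row)
    return buffer.getvalue()


sample = json.dumps([
    {"op_type": "aten.add.Tensor", "input_shapes": [[5, 5], [5, 5]], "status": "passed"},
    {"op_type": "aten.relu.default", "input_shapes": [[5, 5]], "status": "failed"},
])
print(op_results_to_csv(sample))
```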

Op-by-op execution is currently performed on the PyTorch FX graph. We will be adding support for op-by-op execution on the StableHLO graph soon, to allow op-by-op bring-up of ONNX models.

The repository uses pre-commit; read more about it here.

Getting Started

This document walks you through how to set up TT-Torch. TT-Torch is TT-Forge's front end for converting PyTorch models to different levels of Intermediate Representation (IR) all the way down to TTNN. This is the main Getting Started page. There are two additional Getting Started pages depending on what you want to do. They are all described here, with links provided to each.

The following topics are covered:

- Setup Options

- Configuring Hardware

- Installing a Wheel and Running an Example

- Other Setup Options

- Where to Go Next

NOTE: If you encounter issues, please request assistance on the TT-Torch Issues page.

Setup Options

TT-Torch can be used to run PyTorch models. Because TT-Torch is open source, you can also develop and add features to it. Setup instructions differ based on the task. You have the following options, listed in order of difficulty:

- Installing a Wheel and Running an Example - You should choose this option if you want to run models.

- Using a Docker Container to Run an Example - Choose this option if you want to keep the environment for running models separate from your existing environment.

- Building from Source - This option is best if you want to develop TT-Torch further. It's a more complex process you are unlikely to need if you want to stick with running a model.

Configuring Hardware

Before setup can happen, you must configure your hardware. You can skip this section if you already completed the configuration steps. Otherwise, this section of the walkthrough shows you how to do a quick setup using TT-Installer.

- Configure your hardware with TT-Installer using the Quick Installation section here.

- Reboot your machine.

- Make sure hugepages are enabled:

sudo systemctl enable --now 'dev-hugepages\x2d1G.mount'

sudo systemctl enable --now tenstorrent-hugepages.service

- After you run the TT-Installer script, reboot, and set up hugepages, activate the virtual environment that TT-Installer sets up:

source ~/.tenstorrent-venv/bin/activate

After your environment is running, to check that everything is configured, type the following:

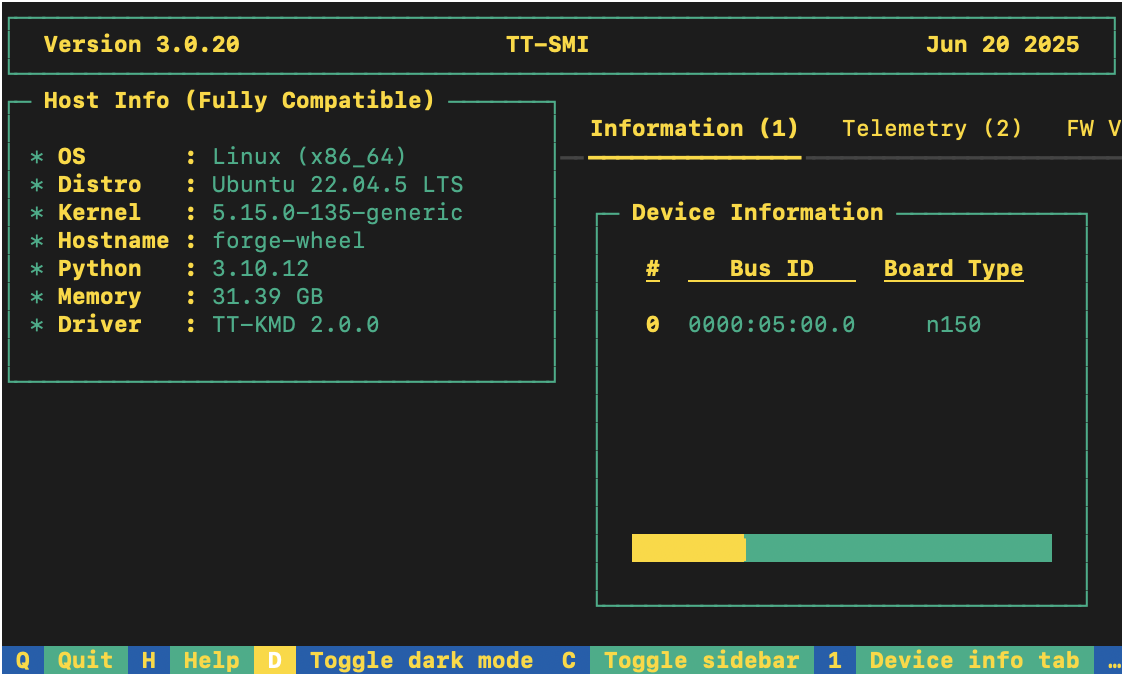

tt-smi

You should see the Tenstorrent System Management Interface. It allows you to view real-time stats, diagnostics, and health info about your Tenstorrent device.
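Besides tt-smi, you can confirm the hugepages setup from Python. The sketch below is a hypothetical helper, not part of TT-Installer: it reads the standard Linux sysfs counter for 1 GiB huge pages and checks for the /dev/hugepages-1G mount point, which is the path the 'dev-hugepages\x2d1G.mount' unit corresponds to once systemd's unit-name escaping is undone.

```python
import os
from pathlib import Path

# Standard Linux sysfs counter for reserved 1 GiB (1048576 kB) huge pages.
NR_1G_HUGEPAGES = Path("/sys/kernel/mm/hugepages/hugepages-1048576kB/nr_hugepages")
# Mount point of the 'dev-hugepages\x2d1G.mount' unit (systemd escapes '-' as \x2d).
HUGEPAGES_1G_MOUNT = "/dev/hugepages-1G"


def count_1g_hugepages(path: Path = NR_1G_HUGEPAGES) -> int:
    """Return the number of reserved 1 GiB huge pages, or 0 if unavailable."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return 0


def hugepages_ready() -> bool:
    """True if at least one 1 GiB huge page is reserved and the mount is present."""
    return count_1g_hugepages() > 0 and os.path.ismount(HUGEPAGES_1G_MOUNT)


if __name__ == "__main__":
    print("1G hugepages reserved:", count_1g_hugepages())
    print("hugepages ready:", hugepages_ready())
```

If hugepages_ready() returns False after a reboot, re-run the two systemctl commands above.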

Installing a Wheel and Running an Example

This section walks you through downloading and installing a wheel. If you only want to run models, you can install the wheel in any location you like. This walkthrough uses the resnet50_demo.py demo.

- Make sure you are in an active virtual environment. This walkthrough uses the same environment you activated to look at TT-SMI in the Configuring Hardware section. If you are using multiple TT-Forge front ends to run models, you may want to set up a separate virtual environment instead. For example:

python3 -m venv .tt-torch-venv

source .tt-torch-venv/bin/activate

- Download and install the wheel in your active virtual environment:

pip install tt-torch --pre --extra-index-url https://pypi.eng.aws.tenstorrent.com/

- Before you run a model, download and install the MPI implementation:

wget -q https://github.com/dmakoviichuk-tt/mpi-ulfm/releases/download/v5.0.7-ulfm/openmpi-ulfm_5.0.7-1_amd64.deb -O /tmp/openmpi-ulfm.deb && \
sudo apt install -y /tmp/openmpi-ulfm.deb

- Install the other packages you need for the demo:

pip install pillow

pip install tabulate

pip install requests

- Clone the TT-Forge repo:

git clone https://github.com/tenstorrent/tt-forge.git

- Navigate into tt-forge/demos/tt-torch. You are now ready to try running a model.

- Run the demo:

python resnet50_demo.py

If all goes well, you should get a list of top five predictions for what the example image is, with the top one being a cat.

Other Setup Options

If you want to keep your environment completely separate in a Docker container, or you want to develop TT-Torch further, this section links you to the pages with those options:

Where to Go Next

Now that you have set up the TT-Torch wheel, you can compile and run other demos. See the TT-Torch folder in the TT-Forge repo for other demos you can try.

Getting Started with Docker

This document walks you through how to set up TT-Torch using a Docker image. There are two other available options for getting started:

- Installing a Wheel - if you do not want to use Docker, and prefer to use a virtual environment by itself instead, use this method.

- Building From Source - if you plan to develop TT-Torch further, you must build from source, and should use this method.

Configuring Hardware

Before setup can happen, you must configure your hardware. You can skip this section if you already completed the configuration steps. Otherwise, this section of the walkthrough shows you how to do a quick setup using TT-Installer.

- Configure your hardware with TT-Installer using the Quick Installation section here.

- Reboot your machine.

- Make sure hugepages are enabled:

sudo systemctl enable --now 'dev-hugepages\x2d1G.mount'

sudo systemctl enable --now tenstorrent-hugepages.service

- After you run the TT-Installer script, reboot, and set up hugepages, activate the virtual environment that TT-Installer sets up:

source ~/.tenstorrent-venv/bin/activate

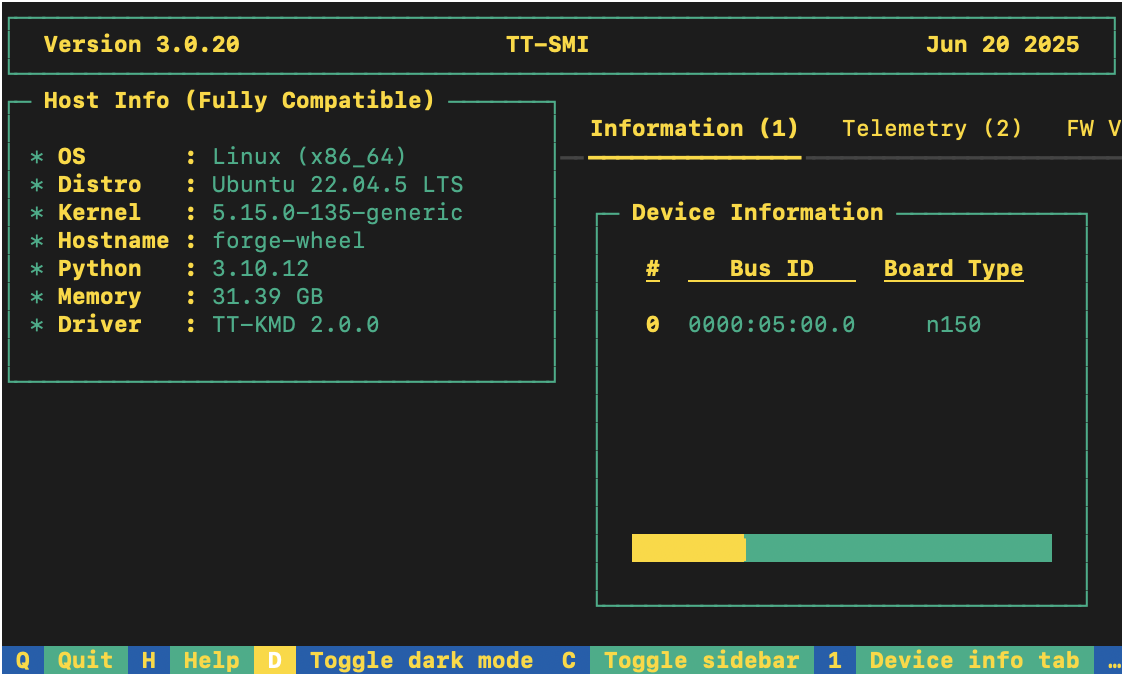

When your environment is running, to check that everything is configured, type the following:

tt-smi

You should see the Tenstorrent System Management Interface. It allows you to view real-time stats, diagnostics, and health info about your Tenstorrent device.

Setting up the Docker Container

This section walks through the installation steps for using a Docker container for your project.

To install, do the following:

- Install Docker if you do not already have it:

sudo apt update

sudo apt install docker.io -y

sudo systemctl start docker

sudo systemctl enable docker

- Test that Docker is installed:

docker --version

- Add your user to the Docker group:

sudo usermod -aG docker $USER

newgrp docker

- Run the Docker container:

docker run -it --rm \
    --device /dev/tenstorrent \
    -v /dev/hugepages-1G:/dev/hugepages-1G \
    ghcr.io/tenstorrent/tt-torch-slim:latest

NOTE: You cannot isolate devices in containers. You must pass through all devices even if you are only using one. You can do this by passing --device /dev/tenstorrent. Do not try to pass --device /dev/tenstorrent/1 or similar, as this type of device-in-container isolation will result in fatal errors later on during execution.

- If you want to check that it is running, open a new tab with the Same Command option and run the following:

docker ps

If all goes well, you are now ready to move on to the next section, and run your first demo model.
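If you launch the container from a script, a small helper can enforce the whole-directory rule from the device-isolation note. This is a hypothetical sketch (docker_run_argv is not a Tenstorrent tool): it only builds the same docker run command used in this guide as an argument list, and rejects per-device paths such as /dev/tenstorrent/1.

```python
import shlex
from typing import List

DEVICE_ROOT = "/dev/tenstorrent"


def docker_run_argv(image: str = "ghcr.io/tenstorrent/tt-torch-slim:latest",
                    device: str = DEVICE_ROOT) -> List[str]:
    """Hypothetical helper: build this guide's `docker run` command as a list.

    Passing a single device such as /dev/tenstorrent/1 is rejected, because
    per-device isolation inside the container leads to fatal errors later on.
    """
    if device.rstrip("/") != DEVICE_ROOT:
        raise ValueError(f"pass the whole {DEVICE_ROOT} directory, not {device!r}")
    return [
        "docker", "run", "-it", "--rm",
        "--device", DEVICE_ROOT,
        "-v", "/dev/hugepages-1G:/dev/hugepages-1G",
        image,
    ]


print(shlex.join(docker_run_argv()))
```

Passing the list to subprocess.run starts the container; building an argument list instead of a shell string avoids quoting problems.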

Running Models in Docker

This section shows you how to run a model using Docker. The provided example is from the TT-Forge repo. Do the following:

- Inside your running Docker container, clone the TT-Forge repo:

git clone https://github.com/tenstorrent/tt-forge.git

- Add TT-Forge to your Python path:

export PYTHONPATH=/tt-forge:$PYTHONPATH

- Navigate into TT-Forge and run the following command:

git submodule update --init --recursive

- This setup uses the resnet50_demo.py model, which requires additional dependencies. Install the following:

pip install pillow

pip install tabulate

pip install requests

- Navigate into tt-forge/demos/tt-torch and run the model:

python resnet50_demo.py

If all goes well, you should get a list of top five predictions for what the example image is, with the top one being a cat.

Where to Go Next

Now that you have set up TT-Torch, you can compile and run your own models, or continue trying demos from the TT-Torch folder in the TT-Forge repo.

Getting Started

This document describes how to build the TT-Torch project on your local machine. You must build from source if you want to develop for TT-Torch. If you only want to run models, please choose one of the following sets of instructions instead:

- Installing a Wheel and Running an Example - You should choose this option if you want to run models.

- Using a Docker Container to Run an Example - Choose this option if you want to keep the environment separate from your existing environment.

The following topics are covered:

NOTE: If you encounter issues, please request assistance on the TT-Torch Issues page.

Configuring Hardware

Before setup can happen, you must configure your hardware. You can skip this section if you already completed the configuration steps. Otherwise, this section of the walkthrough shows you how to do a quick setup using TT-Installer.

- Configure your hardware with TT-Installer using the Quick Installation section here.

- Reboot your machine.

- After you run the TT-Installer script and reboot, activate the virtual environment it sets up:

source ~/.tenstorrent-venv/bin/activate

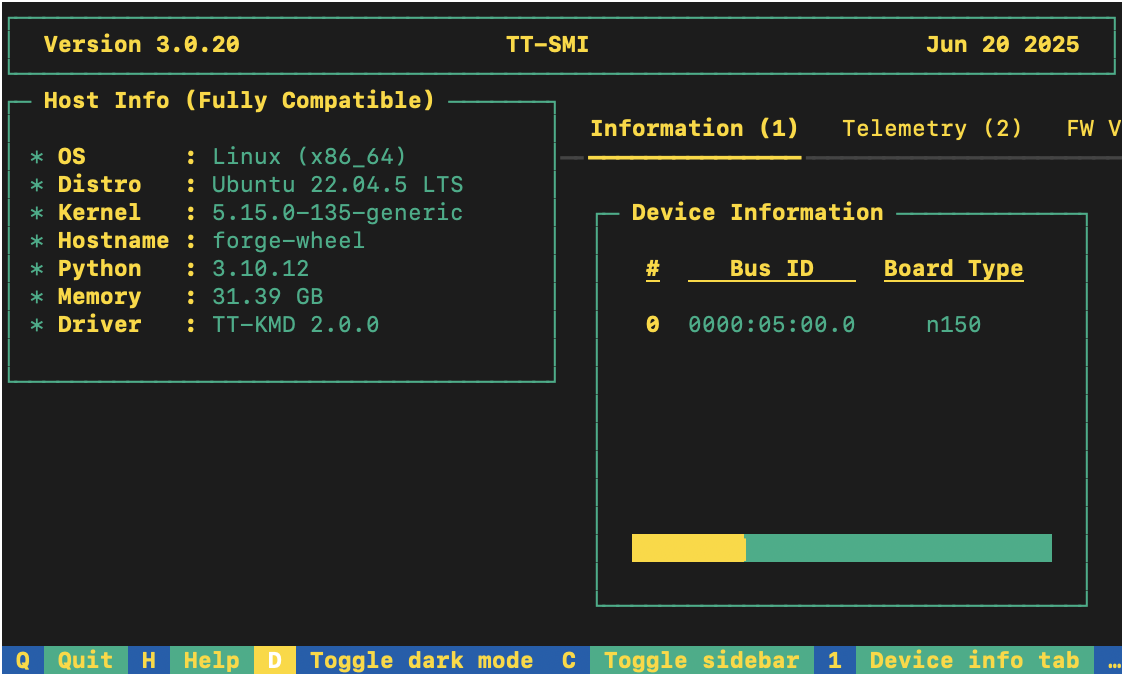

After your environment is running, to check that everything is configured, type the following:

tt-smi

You should see the Tenstorrent System Management Interface. It allows you to view real-time stats, diagnostics, and health info about your Tenstorrent device.

System Dependencies

TT-Torch has the following system dependencies:

- Ubuntu 22.04

- Python 3.11

- python3.11-venv

- Clang 17

- GCC 11

- Ninja

- CMake 4.0.3

Installing Python

If your system already has Python installed, make sure it is Python 3.11 or higher:

python3 --version

If not, install Python:

sudo apt install python3.11

Installing CMake 4.0.3

This section walks you through installing CMake 4 or higher.

- Install CMake 4.0.3:

pip install cmake==4.0.3

- Check that the correct version of CMake is installed:

cmake --version

If you see cmake version 4.0.3 you are ready for the next section.

Installing LLVM 17 Toolchain

This section walks you through installing LLVM 17 Toolchain.

- Download and run the LLVM 17 toolchain installation script:

wget https://apt.llvm.org/llvm.sh

chmod u+x llvm.sh

sudo ./llvm.sh 17

- Install C++ Standard Library (libc++) Development Files for LLVM 17:

sudo apt install -y libc++-17-dev libc++abi-17-dev

- Symlink Clang:

sudo ln -s /usr/bin/clang-17 /usr/bin/clang

sudo ln -s /usr/bin/clang++-17 /usr/bin/clang++

- Check that Clang 17 selects GCC 11 as its GCC installation:

clang -v

- Look for the line that starts with Selected GCC installation:. If it shows something other than GCC 11, and GCC 11 is not listed as a candidate, install GCC 11 using:

sudo apt-get install gcc-11 lib32stdc++-11-dev lib32gcc-11-dev

- If you see GCC 12 installed and selected as the default, remove it with:

sudo rm -rf /usr/bin/../lib/gcc/x86_64-linux-gnu/12
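To check the selected GCC installation without reading through the whole clang -v output, you could parse the Selected GCC installation: line. This is a hypothetical helper; it assumes the line ends with a path whose last component is the GCC major version (as in the /usr/bin/../lib/gcc/x86_64-linux-gnu/12 path above), and the sample text is illustrative rather than captured from a real machine.

```python
import re
import subprocess
from typing import Optional

# Hypothetical helper; matches the "Selected GCC installation: <path>" line.
_SELECTED_GCC = re.compile(r"^Selected GCC installation:\s*(\S+)", re.MULTILINE)


def selected_gcc_version(clang_v_output: str) -> Optional[str]:
    """Return the last path component of the GCC installation Clang selected."""
    match = _SELECTED_GCC.search(clang_v_output)
    if match is None:
        return None
    # e.g. /usr/bin/../lib/gcc/x86_64-linux-gnu/11 -> "11"
    return match.group(1).rstrip("/").rsplit("/", 1)[-1]


def clang_selected_gcc(clang: str = "clang") -> Optional[str]:
    """Run `clang -v` (which reports to stderr) and extract the selected GCC version."""
    proc = subprocess.run([clang, "-v"], capture_output=True, text=True)
    return selected_gcc_version(proc.stderr + proc.stdout)


# Illustrative sample output, not captured from a real machine.
sample = """Ubuntu clang version 17.0.6
Found candidate GCC installation: /usr/bin/../lib/gcc/x86_64-linux-gnu/11
Found candidate GCC installation: /usr/bin/../lib/gcc/x86_64-linux-gnu/12
Selected GCC installation: /usr/bin/../lib/gcc/x86_64-linux-gnu/12
"""
print(selected_gcc_version(sample))  # prints 12
```

On a configured machine, clang_selected_gcc() should return "11".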

Installing Dependencies

Install additional dependencies required by TT-Torch that were not installed by the TT-Installer script:

sudo apt-get install -y \
    libhwloc-dev \
    libtbb-dev \
    libcapstone-dev \
    pkg-config \
    linux-tools-generic \
    ninja-build \
    libgtest-dev \
    ccache \
    doxygen \
    graphviz \
    patchelf \
    libyaml-cpp-dev \
    libboost-all-dev \
    lcov \
    protobuf-compiler

Install OpenMPI:

sudo wget -q https://github.com/dmakoviichuk-tt/mpi-ulfm/releases/download/v5.0.7-ulfm/openmpi-ulfm_5.0.7-1_amd64.deb -O /tmp/openmpi-ulfm.deb && sudo apt install /tmp/openmpi-ulfm.deb

NOTE: If you want to run a test that uses cv2 (e.g., YOLOv10), please install libgl.

How to Build From Source

This section describes how to build TT-Torch. You need to build TT-Torch whether you plan to do development work, or run models.

- Clone the TT-Torch repo:

git clone https://github.com/tenstorrent/tt-torch.git

cd tt-torch

- Create a toolchain directory and make the account you are using the owner:

sudo mkdir -p /opt/ttmlir-toolchain

sudo chown -R $USER /opt/ttmlir-toolchain

- Build the toolchain for TT-Torch (this build step only needs to be done once):

cd third_party

cmake -B toolchain -DBUILD_TOOLCHAIN=ON

cd -

NOTE: This step takes a long time to complete.

- Build TT-Torch:

source env/activate

cmake -G Ninja -B build

cmake --build build

cmake --install build

NOTE: It takes a while for everything to build.

After the build completes, you are ready to run a test model.

Running a Test Model

You can test your installation by running the resnet50_demo.py:

python demos/resnet/resnet50_demo.py

For additional tests and models, please follow Testing.

Testing

This document walks you through how to test tt-torch after building from source or installing from a wheel.

Infrastructure

tt-torch uses pytest for all unit and model tests.

Unit Tests

- PyTorch unit tests are located under tests/torch.

- ONNX unit tests are located under tests/onnx.

You can check that everything is working with a basic unit test:

# If building tt-torch from source

pytest -svv tests/torch/test_basic.py

# If installing tt-torch from wheel

pip install pytest

curl -s https://raw.githubusercontent.com/tenstorrent/tt-torch/main/tests/torch/test_basic.py -o test_basic.py

pytest -svv test_basic.py

NOTE: If you are using a multi-device setup, we encourage you to run the following tests:

- tests/torch/test_basic_async.py

- tests/torch/test_basic_multichip.py

Running the resnet Demo

You can also try a demo:

# If building tt-torch from source

python demos/resnet/resnet50_demo.py

# If installing tt-torch from wheel

pip install pillow tabulate requests

curl -s https://raw.githubusercontent.com/tenstorrent/tt-torch/main/demos/resnet/resnet50_demo.py -o resnet50_demo.py

python resnet50_demo.py

Compiling and Running a Model

Once you have your torch.nn.Module, compile the model:

import torch
import tt_torch  # registers the tt-torch backends with torch.compile

class MyModel(torch.nn.Module):
    def __init__(self):
        super().__init__()
        ...  # define submodules here

    def forward(self, x):
        ...  # define the computation here

model = MyModel()
model = torch.compile(model, backend="tt-legacy")

inputs = ...
outputs = model(inputs)

Example - Add Two Tensors

Here is an example of a small model that adds its inputs, running through tt-torch. Try it out!

import torch
import tt_torch

class AddTensors(torch.nn.Module):
    def forward(self, x, y):
        return x + y

model = AddTensors()
tt_model = torch.compile(model, backend="tt-legacy")

x = torch.ones(5, 5)
y = torch.ones(5, 5)
print(tt_model(x, y))

Testing With Experimental Flow

We are experimenting with torch-xla as a method of capturing and executing PyTorch models. We plan to eventually use torch-xla as the main execution engine for tt-torch.

Experimental torch.compile Backend ("tt-experimental")

import torch
import tt_torch

class AddTensors(torch.nn.Module):
    def forward(self, x, y):
        return x + y

model = AddTensors()
tt_model = torch.compile(model, backend="tt-experimental")

x = torch.ones(5, 5)
y = torch.ones(5, 5)
print(tt_model(x, y))

Experimental Eager Execution

torch-xla allows us to execute PyTorch models eagerly using the .to(device) infrastructure.

import torch
import tt_torch

class AddTensors(torch.nn.Module):
    def forward(self, x, y):
        return x + y

model = AddTensors()
model = model.to("xla")

x = torch.ones(5, 5, device="xla")
y = torch.ones(5, 5, device="xla")
print(model(x, y).to("cpu"))

Model Zoo

You can view our model zoo under tests/models.

Please see overview for an explanation of how to control model tests.

You can always run pytest --collect-only to view the available test names in a test file.

/userhome/tt-torch$ pytest --collect-only tests/models/resnet
====================================================================== test session starts =======================================================================
platform linux -- Python 3.10.12, pytest-8.4.0, pluggy-1.6.0
rootdir: /userhome/tt-torch
configfile: pyproject.toml
plugins: cov-6.2.1
collected 12 items

<Dir tt-torch>
  <Package tests>
    <Dir models>
      <Dir resnet>
        <Module test_resnet.py>
          <Function test_resnet[single_device-op_by_op_stablehlo-train]>
          <Function test_resnet[single_device-op_by_op_stablehlo-eval]>
          <Function test_resnet[single_device-op_by_op_torch-train]>
          <Function test_resnet[single_device-op_by_op_torch-eval]>
          <Function test_resnet[single_device-full-train]>
          <Function test_resnet[single_device-full-eval]>
          <Function test_resnet[data_parallel-op_by_op_stablehlo-train]>
          <Function test_resnet[data_parallel-op_by_op_stablehlo-eval]>
          <Function test_resnet[data_parallel-op_by_op_torch-train]>
          <Function test_resnet[data_parallel-op_by_op_torch-eval]>
          <Function test_resnet[data_parallel-full-train]>
          <Function test_resnet[data_parallel-full-eval]>

Controlling Compiler Behavior

You can use the following environment variables to override default behavior:

| Environment Variable | Behavior | Default |
|---|---|---|
| TT_TORCH_COMPILE_DEPTH | Sets the maximum compile depth; see tt_torch/tools/utils.py for options. | EXECUTE |
| TT_TORCH_VERIFY_OP_BY_OP | Sets whether to verify the output of each compiled op against PyTorch when running with compile depth EXECUTE_OP_BY_OP. | False |
| TT_TORCH_VERIFY_INTERMEDIATES | Sets whether to verify runtime intermediates during execution. | False |
| TT_TORCH_CONSTEVAL | Enables evaluation of constant expressions (consteval) in the Torch FX graph prior to compilation. | False |
| TT_TORCH_CONSTEVAL_PARAMETERS | Extends consteval to include parameters (e.g., model weights) as well as embedded constants. | False |
| TT_TORCH_INLINE_PARAMETERS | Inlines parameters in the MLIR module (and thus the flatbuffer executable) rather than requiring them as inputs. NOTE: The maximum size of a flatbuffer is 2GB, so this will cause compilation to fail for sufficiently large models. | False |
| TT_TORCH_IR_LOG_LEVEL | Enables printing MLIR from Torch to TTNN. It supports two modes: INFO and DEBUG. INFO prints MLIR for all conversion steps (Torch, StableHLO, TTIR and TTNN MLIR graphs). DEBUG additionally prints intermediate MLIR for all passes (IR dump before and after each pass). Be warned: DEBUG IR printing forces single-core compilation, so it is much slower. | Disabled |
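Because these are environment variables, one way to try a setting for a single run without changing your shell is to pass a modified environment to a child process. The helper names below are hypothetical, and the sketch assumes that TT_TORCH_COMPILE_DEPTH accepts CompileDepth member names (as the default EXECUTE suggests) and that TT_TORCH_IR_LOG_LEVEL accepts INFO or DEBUG, as described in the table.

```python
import os
import subprocess
from typing import Dict, Mapping, Optional, Sequence


def tt_torch_env(overrides: Mapping[str, str],
                 base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Hypothetical helper: copy the environment and apply TT_TORCH_* overrides."""
    env = dict(os.environ if base is None else base)
    for name, value in overrides.items():
        # Catches names that lack the TT_TORCH_ prefix and would be ignored.
        if not name.startswith("TT_TORCH_"):
            raise ValueError(f"{name} is not a TT_TORCH_* variable")
        env[name] = str(value)
    return env


def run_with_overrides(cmd: Sequence[str],
                       overrides: Mapping[str, str]) -> subprocess.CompletedProcess:
    """Run cmd in a child process; the current shell's environment is unchanged."""
    return subprocess.run(list(cmd), env=tt_torch_env(overrides), check=True)


print(tt_torch_env({"TT_TORCH_IR_LOG_LEVEL": "INFO"}, base={}))
```

For example, run_with_overrides(["pytest", "-svv", "tests/torch/test_basic.py"], {"TT_TORCH_COMPILE_DEPTH": "EXECUTE_OP_BY_OP"}) would run the basic unit test with the op-by-op compile depth, under the assumptions above.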

Controlling Compiler Behavior Programmatically

Instead of using the above environment variables, you can also configure compiler behavior programmatically.

Here is an example of enabling consteval:

from tt_torch.dynamo.backend import BackendOptions
from tt_torch.tools.utils import CompilerConfig
import tt_torch
import torch

class MyModel(torch.nn.Module):
    def __init__(self):
        super().__init__()
        ...  # define submodules here

    def forward(self, x):
        ...  # define the computation here

model = MyModel()

cc = CompilerConfig()
cc.enable_consteval = True
cc.consteval_parameters = True  # This will enable constant folding on the parameters in addition to any constants

options = BackendOptions()
options.compiler_config = cc

model = torch.compile(model, backend="tt-legacy", options=options)

inputs = ...
outputs = model(inputs)

Pre-Commit

Pre-Commit applies a Git hook to the local repository, ensuring linting is checked and applied on every git commit action. Install it from the root of the repository using:

source env/activate

pre-commit install

If you have already made commits before installing the pre-commit hooks, you can run the following to “catch up”:

pre-commit run --all-files

For more information, visit pre-commit.

Profiling

Introduction

tt-torch uses the tt-metal Tracy fork to collect profiling data. Tracy is a single process profiler, and uses a client-server model to trace both host calls and on-device operation performance. tt-torch implements a wrapper called tt_profile.py with custom orchestration logic to handle the spawning of the Tracy capture server and the client workload to be profiled, as well as report generation and data postprocessing functionality.

The output of tt_profile.py is a CSV report displaying a table of operations executed on device and rich timing, memory usage and configuration data associated with them.

Note: Paths in this document are given relative to the repo root.

Prerequisites

When building tt-torch (see Getting Started), you must configure your CMake build with the additional option TT_RUNTIME_ENABLE_PERF_TRACE=ON, i.e., run: cmake -G Ninja -B build -DTT_RUNTIME_ENABLE_PERF_TRACE=ON.

Usage

The tt_profile.py tool is the recommended entrypoint for profiling workloads in tt-torch.

tt_profile.py [-h] [-o OUTPUT_PATH] [-p PORT] "test_command"

Note: The test_command must be quoted!

As a minimal example, the following command will run and profile the MNIST test:

python tt_torch/tools/tt_profile.py "pytest -svv tests/models/mnist/test_mnist.py::test_mnist_train[single_device-full-eval]"

The report is created at results/perf/device_ops_perf_trace.csv by default, unless an output path is specified.

If tt-torch is installed using a wheel, the tt_profile command will be registered in the environment in which tt-torch is installed. Users can simply run tt_profile "<pytest or other command>" without providing a path to the profiler source file.
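Once the report exists, a few lines of Python are enough for a first look, for example summing device time per op type. The column names in this sketch (OP CODE and DEVICE KERNEL DURATION [ns]) are assumptions based on tt-metal's device op performance report, and slowest_ops is a hypothetical helper; open the CSV and adjust the names to its actual header row.

```python
import csv
from collections import defaultdict
from typing import Dict, List, Tuple

# Assumed column names; check the header row of your report.
OP_COLUMN = "OP CODE"
DURATION_COLUMN = "DEVICE KERNEL DURATION [ns]"


def slowest_ops(csv_path: str, k: int = 5,
                op_column: str = OP_COLUMN,
                duration_column: str = DURATION_COLUMN) -> List[Tuple[str, float]]:
    """Sum per-op device durations (ns) and return the k largest totals."""
    totals: Dict[str, float] = defaultdict(float)
    with open(csv_path, newline="") as f:
        for row in csv.DictReader(f):
            raw = (row.get(duration_column) or "").strip()
            try:
                duration = float(raw)
            except ValueError:
                continue  # skip rows without a numeric duration
            totals[row[op_column]] += duration
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:k]
```

For example, slowest_ops("results/perf/device_ops_perf_trace.csv") returns up to five (op, total nanoseconds) pairs, largest first.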

Limitations

- Tracy is a single-process profiler and will not work with multiprocess workflows. This includes tests parameterized by op_by_op_stablehlo and op_by_op_torch, which break a model down into individual ops and run them serially in separate processes.

- To view traces, you can use install/tt-metal/generated/profiler/.logs/tracy_profile_log_host.tracy. This is a .tracy file that can be consumed by the tt-metal Tracy GUI to produce visual profiling traces of host and device activity. You must use the tt-metal Tracy GUI to view this file. Refer to the GUI section in the tt-metal profiling documentation; other sections are not applicable to tt-torch profiling.

Troubleshooting

'tt-torch/install/tt-metal/tools/profiler/bin/capture-release -o tracy_profile_log_host.tracy -f -p 8086' timed out after X seconds

- Tracy uses a client-server model to communicate profiling data between the Tracy capture server and the client being profiled.

- Communication between client and server is done on a given port (default: 8086), as specified with the -p option.

- If multiple Tracy client/server processes are active at once, previous processes are left dangling, or other processes on the host occupy port 8086, there may be contention and unexpected behavior, including capture server timeouts.

- This may be addressed by manually specifying an unused port with the -p option to tt_profile.py.
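Finding an unused port can be scripted. In the sketch below, port_is_free, pick_free_port and tt_profile_argv are hypothetical helpers, and the script assumes it is run from the repo root so that tt_torch/tools/tt_profile.py resolves. Building an argument list also keeps the test command as one argument, which is what quoting it achieves on the shell.

```python
import shlex
import socket
import sys
from typing import List, Optional

DEFAULT_TRACY_PORT = 8086


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if the TCP port can currently be bound on this host."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


def pick_free_port(host: str = "127.0.0.1") -> int:
    """Ask the OS for an unused TCP port (binding to port 0 picks one)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind((host, 0))
        return sock.getsockname()[1]


def tt_profile_argv(test_command: str, port: int,
                    output_path: Optional[str] = None) -> List[str]:
    """Build a tt_profile.py invocation; the test command stays ONE argument."""
    argv = [sys.executable, "tt_torch/tools/tt_profile.py", "-p", str(port)]
    if output_path is not None:
        argv += ["-o", output_path]
    argv.append(test_command)
    return argv


port = DEFAULT_TRACY_PORT if port_is_free(DEFAULT_TRACY_PORT) else pick_free_port()
cmd = "pytest -svv tests/models/mnist/test_mnist.py::test_mnist_train[single_device-full-eval]"
print(shlex.join(tt_profile_argv(cmd, port)))
```

A port that is free when checked can still be taken before Tracy binds it, so treat this as a best-effort check.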

How to add model tests?

Requirements

Following the instructions on this page will show you how to build your environment and run a demo.

TT-Torch Backend in a Nutshell

ModelTester and OnnxModelTester

Our testing framework uses ModelTester and OnnxModelTester, defined under tests/utils.py.

ModelTester and OnnxModelTester are designed to facilitate the testing of PyTorch and ONNX models, respectively. These classes provide a structured framework for loading models, preparing inputs, running inference, and verifying the accuracy of the outputs.

ModelTester

The ModelTester class serves as a base class for testing PyTorch models. It handles common testing procedures and provides abstract methods that derived classes can implement for specific model loading and input preparation.

Derived classes must implement the following abstract methods:

- _load_model(): This method should load the PyTorch model to be tested and return the model object.

- _load_inputs(): This method should load or generate the input data for the model and return it. The input should be a Torch object.

- _extract_outputs() (optional): This method should return a tuple of torch tensors based on the outputs if ModelTester's _extract_outputs fails.
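This division of labor is the template-method pattern: the base class drives the test, and subclasses only supply the model and inputs. The sketch below illustrates that pattern with a hypothetical, heavily simplified MiniModelTester that needs neither hardware nor PyTorch; it is not the real ModelTester, which also takes a CompilerConfig, compiles the model, and verifies outputs.

```python
from abc import ABC, abstractmethod
from typing import Any, Tuple


class MiniModelTester(ABC):
    """Hypothetical, heavily simplified stand-in for ModelTester."""

    def __init__(self, model_name: str, mode: str = "eval"):
        self.model_name = model_name
        self.mode = mode

    @abstractmethod
    def _load_model(self) -> Any:
        """Return the model (any callable) under test."""

    @abstractmethod
    def _load_inputs(self) -> Any:
        """Return the inputs; a tuple is unpacked into positional arguments."""

    def _extract_outputs(self, outputs: Any) -> Tuple[Any, ...]:
        # Default extraction: normalize a single output into a 1-tuple.
        return outputs if isinstance(outputs, tuple) else (outputs,)

    def test_model(self) -> Tuple[Any, ...]:
        # The base class fixes the order of steps; subclasses fill them in.
        model = self._load_model()
        inputs = self._load_inputs()
        outputs = model(*inputs) if isinstance(inputs, tuple) else model(inputs)
        return self._extract_outputs(outputs)


class AddTester(MiniModelTester):
    def _load_model(self):
        return lambda x, y: x + y

    def _load_inputs(self):
        return (2, 3)


print(AddTester("add").test_model())  # prints (5,)
```

Because _load_model and _load_inputs are abstract, forgetting to implement either one fails at construction time rather than midway through a test.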

OnnxModelTester

The OnnxModelTester class inherits from ModelTester and extends it to specifically handle testing of ONNX models.

Derived classes must implement the following abstract methods:

- _load_model(): This method should load the ONNX model to be tested and return the model object.

- _load_inputs(): This method should load or generate the input data for the model and return it. The input should be a Torch object.

- _extract_outputs() (optional): This method should return a tuple of torch tensors based on the outputs if ModelTester's _extract_outputs fails.

Backend

Backends are described under tt_torch/dynamo/backend.py and tt_torch/onnx_compile/onnx_compile.py. There are a few factors determining which backend to use:

from enum import Enum

class CompileDepth(Enum):
    TORCH_FX = 1
    STABLEHLO = 2
    TTNN_IR = 3
    COMPILE_OP_BY_OP = 4
    EXECUTE_OP_BY_OP = 5
    EXECUTE = 6

class OpByOpBackend(Enum):
    TORCH = 1
    STABLEHLO = 2

Backends for Torch Models:

- Op-by-op flows (COMPILE_OP_BY_OP / EXECUTE_OP_BY_OP): OpByOpBackend=TORCH --> uses TorchExecutor; OpByOpBackend=STABLEHLO --> uses StablehloExecutor.

- Other compile depths: only OpByOpBackend=TORCH is allowed. Uses Executor.

Backends for ONNX Models:

- Op-by-op flows (COMPILE_OP_BY_OP / EXECUTE_OP_BY_OP): only OpByOpBackend=STABLEHLO is allowed. Uses StablehloExecutor.

- Other compile depths: only OpByOpBackend=STABLEHLO is allowed. Uses OnnxExecutor.
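These rules can be restated as a small lookup function, which makes the allowed combinations easy to check. This is a hypothetical sketch, not tt-torch's actual dispatch code: it copies the CompileDepth and OpByOpBackend enums listed above and returns executor class names as strings.

```python
from enum import Enum


class CompileDepth(Enum):
    TORCH_FX = 1
    STABLEHLO = 2
    TTNN_IR = 3
    COMPILE_OP_BY_OP = 4
    EXECUTE_OP_BY_OP = 5
    EXECUTE = 6


class OpByOpBackend(Enum):
    TORCH = 1
    STABLEHLO = 2


OP_BY_OP_DEPTHS = {CompileDepth.COMPILE_OP_BY_OP, CompileDepth.EXECUTE_OP_BY_OP}


def select_executor(is_onnx: bool, depth: CompileDepth, backend: OpByOpBackend) -> str:
    """Hypothetical restatement of the documented rules, not tt-torch's code."""
    op_by_op = depth in OP_BY_OP_DEPTHS
    if is_onnx:
        # ONNX models only ever go through StableHLO.
        if backend is not OpByOpBackend.STABLEHLO:
            raise ValueError("ONNX models require OpByOpBackend.STABLEHLO")
        return "StablehloExecutor" if op_by_op else "OnnxExecutor"
    if op_by_op:
        return "TorchExecutor" if backend is OpByOpBackend.TORCH else "StablehloExecutor"
    if backend is not OpByOpBackend.TORCH:
        raise ValueError("outside op-by-op flows, only OpByOpBackend.TORCH is allowed")
    return "Executor"
```

For example, a PyTorch model at CompileDepth.EXECUTE_OP_BY_OP with OpByOpBackend.TORCH maps to "TorchExecutor", while an ONNX model at CompileDepth.EXECUTE maps to "OnnxExecutor".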

Executor

TT-Torch provides a set of executor classes that handle different types of models (ONNX, PyTorch) and compilation strategies (full compilation, op-by-op, etc.). The executor classes form a hierarchy, with specialized executors for different scenarios.

Executor (Base)
├── OpByOpExecutor
│   ├── TorchExecutor
│   └── StablehloExecutor
└── OnnxExecutor

- Executor, OnnxExecutor and OpByOpExecutor are defined under tt_torch/dynamo/executor.py.

- TorchExecutor is defined under tt_torch/dynamo/torch_backend.py.

- StablehloExecutor is defined under tt_torch/dynamo/shlo_backend.py.

Executor (Base Class)

The Executor class is the foundation for all executor implementations. It provides the basic framework for:

- Managing model representations (PyTorch programs, etc.)

- Converting input types between different formats

- Handling constants and model parameters

- Executing compiled models via TT-MLIR

- Managing device resources

- Verifying execution results

Key methods:

- __call__: Main entry point for executing the model

- set_binary: Sets the compiled binary for execution

- typecast_inputs: Converts inputs to hardware-supported types

- register_intermediate_callback: Sets up callbacks for runtime verification

OpByOpExecutor

OpByOpExecutor extends the base Executor to support operation-by-operation compilation and execution. This allows for:

- Detailed profiling of individual operations

- Verification of each operation's outputs

- Debugging specific operations that might fail

Key methods:

- compile_op: Compiles a single operation

- run_op: Executes a single compiled operation

TorchExecutor

TorchExecutor is specialized for handling PyTorch models in an op-by-op fashion. It:

- Processes PyTorch FX graph modules node by node

- Converts PyTorch operations to StableHLO

- Compares outputs with golden (PyTorch) outputs for verification

Key methods:

- get_stable_hlo_graph: Converts a PyTorch operation to StableHLO IR

- run_gm_op_by_op: Executes a graph module operation by operation

StablehloExecutor

StablehloExecutor specializes in executing models through the StableHLO IR. It can:

- Process ONNX models converted to StableHLO

- Process PyTorch models converted to StableHLO

- Execute individual StableHLO operations

Key methods:

- add_program: Adds a PyTorch program to the executor

- add_onnx_model_proto: Adds an ONNX model to the executor

- get_stable_hlo_graph: Prepares a StableHLO operation for compilation

- shlo_op_by_op: Executes StableHLO operations individually

OnnxExecutor

OnnxExecutor is designed for handling ONNX models. It can:

- Execute ONNX models using ONNX Runtime

- Execute ONNX models converted to TT-MLIR binaries

CompilerConfig

This class manages settings for running models on Tenstorrent devices. Key aspects include:

- Compilation Depth: Defines the level of the compilation pipeline to reach.

- Profiling: Enables the collection of performance data for individual operations.

- Verification: Controls various checks and validations during compilation.

- Environment Overrides: Allows configuration through environment variables. This is explained in detail under Controlling Compiler Behavior.

Please see tt_torch/tools/utils.py for detailed information.

How to Write a Test?

The following is an example test body:

# Insert SPDX licensing. Pre-commit will insert it if it is missing.
# SPDX-FileCopyrightText: (c) 2025 Tenstorrent AI ULC
#
# SPDX-License-Identifier: Apache-2.0

# Some base imports that are required for all tests:
import torch
import pytest
import onnx  # for ONNX tests
from tests.utils import ModelTester  # for PyTorch tests
from tests.utils import OnnxModelTester  # for ONNX tests
from tt_torch.tools.utils import CompilerConfig, CompileDepth, OpByOpBackend


class ThisTester(ModelTester):  # or: class ThisTester(OnnxModelTester):
    def _load_model(self):
        model = ...
        return model

    def _load_inputs(self):
        inputs = ...
        return inputs


# You can pytest-parametrize certain arguments, e.g., mode, OpByOpBackend, model name.
@pytest.mark.parametrize(
    "mode",
    ["train", "eval"],
)
@pytest.mark.parametrize(
    "model_name",
    [
        "model_name_0",
        "model_name_1",
    ],
)
@pytest.mark.parametrize(
    "op_by_op",
    [OpByOpBackend.STABLEHLO, OpByOpBackend.TORCH, None],
    ids=["op_by_op_stablehlo", "op_by_op_torch", "full"],
)
# For PyTorch tests (replace test_my_model with your test name):
def test_my_model(record_property, model_name, mode, op_by_op):
    cc = CompilerConfig()
    cc.enable_consteval = True
    cc.consteval_parameters = True
    if op_by_op:
        cc.compile_depth = CompileDepth.EXECUTE_OP_BY_OP
        if op_by_op == OpByOpBackend.STABLEHLO:
            cc.op_by_op_backend = OpByOpBackend.STABLEHLO

    tester = ThisTester(
        model_name,
        mode,
        compiler_config=cc,
        record_property_handle=record_property,
    )
    results = tester.test_model()
    if mode == "eval":
        # Code to check that the output is as expected goes here.
        ...
    tester.finalize()


# For ONNX tests (apply the same parametrize decorators; note the _onnx suffix):
def test_my_model_onnx(record_property, model_name, mode, op_by_op):
    cc = CompilerConfig()
    if op_by_op:
        cc.compile_depth = CompileDepth.EXECUTE_OP_BY_OP
        cc.op_by_op_backend = OpByOpBackend.STABLEHLO

    tester = ThisTester(
        model_name,
        mode,
        compiler_config=cc,
        record_property_handle=record_property,
        model_group="red",
    )
    results = tester.test_model()
    if mode == "eval":
        # Code to check that the output is as expected goes here.
        ...
    tester.finalize()

You can find example tests under tests/models.

Note: please make sure to distinguish ONNX tests by appending _onnx to the test name, e.g. test_EfficientNet_onnx.py.

Test Run Modes

- op-by-op flow: This breaks the model down into graphs and the graphs down into ops, compiling and executing each unique (first-seen) op independently. Results are written to a .json file and can optionally be converted to an XLS file for reporting as a post-processing step. The op-by-op flow is typically used for bringing up new models and for debugging, and you should start there, especially if the model is a new, untested architecture or you have reason to believe it will not work end-to-end out of the box. It is enabled with cc.compile_depth = CompileDepth.EXECUTE_OP_BY_OP in the test, typically driven by pytest params such as [op_by_op_torch-eval].

- full end-to-end flow: This is the typical compile + execute of the model, which usually includes functional correctness checking. It is enabled with cc.compile_depth = CompileDepth.EXECUTE in the test, typically driven by pytest params such as [full-eval].
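The core idea of the op-by-op flow, running each unique op once and recording its outcome without stopping at the first failure, can be sketched without any Tenstorrent dependencies. In this hypothetical sketch an op is identified by its type plus its input shapes (the uniqueness key used in op-by-op mode), run_op is a placeholder for compiling and executing a single-op graph, and the JSON field names are illustrative rather than the format tt-torch actually writes.

```python
import json


def run_op_by_op(ops, run_op):
    """Run each unique (op_type, input_shapes) pair once and collect results.

    ops: iterable of (op_type, input_shapes) tuples, in graph order.
    run_op: callable that compiles/executes one op and returns a status string;
            a stand-in for the real single-op compile + execute step.
    """
    seen = set()
    results = []
    for op_type, input_shapes in ops:
        key = (op_type, tuple(tuple(s) for s in input_shapes))
        if key in seen:
            continue  # only the first occurrence of an op signature is run
        seen.add(key)
        try:
            status = run_op(op_type, input_shapes)
        except Exception as exc:  # a per-op failure does not stop the flow
            status = f"failed: {exc}"
        results.append(
            {"op": op_type, "input_shapes": [list(s) for s in input_shapes], "status": status}
        )
    return results


def fake_run_op(op_type, input_shapes):
    # Hypothetical: pretend one op type is unsupported.
    if op_type == "aten::triu":
        raise RuntimeError("unsupported op")
    return "executed"


ops = [
    ("aten::add.Tensor", [(1, 32, 1), (1, 32, 1)]),
    ("aten::add.Tensor", [(1, 32, 1), (1, 32, 1)]),  # duplicate, skipped
    ("aten::add.Tensor", [(7, 768), (7, 768)]),  # new shapes, run again
    ("aten::triu", [(32, 32)]),
]
results = run_op_by_op(ops, fake_run_op)
print(json.dumps(results, indent=2))
```

Because the key includes the input shapes, the same op type with different shapes counts as a separate unique op.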

Where to Add Tests on tt-torch GitHub CI?

If you're a Tenstorrent internal developer and have a new model that is either running fully/correctly or still needs some work (compiler support, runtime support, etc.), it should be added to CI in the same PR that adds the model. Below is a guide for where to add it.

Case 1: The New Model Test Runs Correctly End-to-end

If you've tried it and it runs – great!

- Add it to the "nightly full model execute list" in .github/workflows/run-full-model-execution-tests-nightly.yml, ideally balancing the existing groups of tests. Example:

  tests/models/Qwen/test_qwen2_casual_lm.py::test_qwen2_casual_lm[full-eval]

- Also add it to the "weekly op-by-op-flow list" in .github/workflows/run-op-by-op-flow-tests-weekly.yml, where we less frequently run tests that have all ops passing through to EXECUTE depth in the op-by-op flow. Example:

  tests/models/Qwen/test_qwen2_casual_lm.py::test_qwen2_casual_lm[op_by_op_torch-eval]
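The bracketed suffix in these node IDs comes from the test's pytest.mark.parametrize ids. When parametrize decorators are stacked, pytest joins the ids with "-", starting with the decorator closest to the function, which is why a test following the template above yields IDs such as op_by_op_torch-t5-large-eval. The helper below is a hypothetical convenience for listing candidate IDs to paste into a workflow file; it does not query pytest, and pytest's own collection order may differ, so confirm the result with pytest --collect-only.

```python
from itertools import product


def candidate_node_ids(test_file, test_name, decorator_ids):
    """Build pytest node IDs for stacked parametrize decorators.

    decorator_ids: one list of ids per @pytest.mark.parametrize, listed
    top-to-bottom as they appear in the source. pytest puts the id of the
    bottom-most decorator first, so the lists are reversed before combining.
    """
    ids = []
    for combo in product(*reversed(decorator_ids)):
        ids.append(f"{test_file}::{test_name}[{'-'.join(combo)}]")
    return ids


node_ids = candidate_node_ids(
    "tests/models/t5/test_t5.py",
    "test_t5",
    [
        ["train", "eval"],  # "mode" (top decorator)
        ["t5-large"],  # "model_name"
        ["op_by_op_stablehlo", "op_by_op_torch", "full"],  # "op_by_op" (bottom)
    ],
)
for node_id in node_ids:
    print(node_id)
```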

Case 2: The New Model Test Runs End-to-end but Encounters a PCC/ATOL/Checker Error

This is okay; there is still value in running the model.

- Follow the previous section's instructions for adding it to the "nightly full model execute list" and the "weekly op-by-op-flow list", but first open a GitHub issue (following the template and using the models_pcc_issue label, as in the example below) to track the PCC/ATOL/Checker error. Reference the issue in the test body so it can be tracked and debugged, and disable PCC/ATOL/token checking as needed. Example:

```python
# TODO Enable checking - https://github.com/tenstorrent/tt-torch/issues/490
assert_pcc=False,
assert_atol=False,
```
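For readers unfamiliar with the two checks being disabled here: PCC is the Pearson correlation coefficient between the device output and a reference (golden) output, and an ATOL check bounds the largest absolute difference between them. The pure-Python sketch below only shows what each metric computes; it is not the implementation in tests/utils, and any pass/fail thresholds a tester applies are separate from it.

```python
import math


def pcc(a, b):
    """Pearson correlation coefficient of two equal-length flat sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        # Constant inputs: treat identical sequences as perfectly correlated.
        return 1.0 if list(a) == list(b) else 0.0
    return cov / math.sqrt(var_a * var_b)


def max_abs_diff(a, b):
    """Largest element-wise absolute difference (what an ATOL check bounds)."""
    return max(abs(x - y) for x, y in zip(a, b))


golden = [0.10, 0.20, 0.30, 0.40]
device = [0.11, 0.19, 0.31, 0.40]
print(f"PCC={pcc(golden, device):.4f}, max abs diff={max_abs_diff(golden, device):.4f}")
```

A high PCC with a large absolute difference usually means the output has the right shape of values but is scaled or offset, which is why the two checks are tracked separately.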

Case 3: The New Model Test Does Not Run Correctly End-to-end

No problem. If your model hits a compiler failure (unsupported op, etc.) or a runtime assert of any kind when run end-to-end, this is why the op-by-op flow exists: it is designed to flag per-op compile/runtime failures (which are perfectly fine) while still returning an overall passed status.

- Run the op-by-op flow locally (or on CI) for your model, and if pytest finishes without fatal errors, add it to the "nightly op-by-op flow list" (a new or existing group) in .github/workflows/run-op-by-op-flow-tests-nightly.yml, where individual ops will be tracked/debugged and later promoted to the "nightly full model execute list" once ready. Example:

  tests/models/t5/test_t5.py::test_t5[op_by_op_torch-t5-large-eval]

- It is helpful if you run python results/parse_op_by_op_results.py (this generates results/models_op_per_op.xlsx for all models you've recently run in the op-by-op flow) and include the XLS file in your PR. This XLS file contains op-by-op flow results and is also generated in the nightly regression for all work-in-progress models in .github/workflows/run-op-by-op-flow-tests-nightly.yml.

- If results/models_op_per_op.xlsx reports that your model can compile all ops successfully (i.e. all ops reach status 6: CONVERTED_TO_TTNN, but some hit runtime 7: EXECUTE failures), it should also be added to the "nightly e2e compile list" in .github/workflows/run-e2e-compile-tests.yml, which stops before executing the model via TT_TORCH_COMPILE_DEPTH=TTNN_IR pytest ...
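The list-selection rule for Case 3 can be written as a small decision function. This is a hypothetical sketch: it assumes you have already extracted each op's numeric status from the op-by-op results (the real JSON/XLS layout is not described here), and it relies only on the two status codes named above, 6 (CONVERTED_TO_TTNN) and 7 (EXECUTE).

```python
CONVERTED_TO_TTNN = 6  # status codes as named in this document
EXECUTE = 7


def case3_ci_lists(op_statuses):
    """Suggest CI lists for a model that does not run end-to-end (Case 3).

    op_statuses: the highest status each unique op reached in op-by-op flow.
    """
    if not op_statuses:
        raise ValueError("no op results to classify")
    lists = ["nightly op-by-op flow"]  # always applies in Case 3
    if all(status >= CONVERTED_TO_TTNN for status in op_statuses):
        # Every op compiles to TTNN, so an end-to-end compile-only run fits.
        lists.append("nightly e2e compile")
    return lists


print(case3_ci_lists([7, 6, 7]))
print(case3_ci_lists([7, 4, 6]))
```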

How to Load Test Files into/from Large File System (LFS)

We have set up access to an AWS S3 bucket for storing model-related files used in testing. Files uploaded to the S3 bucket can be accessed from the tester scripts. You will need access to the S3 bucket portal to add files; if you don't have an AWS account or access to the S3 bucket, please reach out to the tt-torch community leader. Depending on whether the test runs on CI or locally, the files are then loaded from the CI or IRD LFS caches, which automatically sync with the contents of the S3 bucket.

Load Files into S3 Bucket

Access the S3 bucket portal and upload the file from your local directory. Please add files following this structure:

```text
test_files
├── pytorch
│   ├── huggingface
│   │   ├── meta-llama
│   │   │   ├── Llama-3.1-70B
│   │   │   │   └── <huggingface files>
│   │   │   ├── Llama-2-7b-hf
│   │   │   │   └── <huggingface files>
│   │   │   └── ...
│   │   └── ...
│   ├── yolov10
│   │   └── yolov10.pt
│   └── ...
└── onnx
    ├── ViT
    │   └── ViT.onnx
    └── ...
```

Load Files from S3 Bucket

Once a file is loaded into the S3 bucket, you can access it with the get_file(path) helper function from the tt-forge-models repo:

```python
from third_party.tt_forge_models.tools.utils import get_file
from tests.utils import ModelTester


class ThisTester(ModelTester):
    def _load_model(self):
        file = get_file("test_files/pytorch/yoloyv10/yolov_10n.pt")
        ...
```

The path argument should be the full path of the file in the S3 bucket. DO NOT use the S3 URL.

Loading Files Locally

Locally, get_file(path) pulls files directly from an IRD LFS cache, which is set up to sync with the S3 bucket every 5-10 minutes. You will need to set the IRD_LF_CACHE environment variable to the appropriate address; contact the tt-torch community leader for the IRD LF cache address.

Files are downloaded into a local cache, so the next time you access the same file it does not have to be loaded from the IRD cache or downloaded again. The default location of the local cache is ~/.cache/lfcache/. To redirect files to a custom cache path, set the LOCAL_LF_CACHE environment variable to the desired path.

If you can't get access to the IRD LF cache, you have two options:

- Use path=url to download files. DO NOT use the S3 URL.
- Use path=<local_path>, where local_path is the path of the file relative to your local cache (~/.cache/lfcache/<local_path> or LOCAL_LF_CACHE/<local_path>).
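The local cache location described above can be sketched as follows. This is a hypothetical illustration, not the get_file implementation from tt-forge-models: local_cache_path is an invented name, and the example relative path is illustrative. It only shows how the LOCAL_LF_CACHE override and the ~/.cache/lfcache/ default combine with a relative local_path.

```python
import os
from pathlib import Path


def local_cache_path(local_path, environ=os.environ):
    """Resolve where a cached file would live, honoring LOCAL_LF_CACHE."""
    cache_root = environ.get("LOCAL_LF_CACHE")
    # Fall back to the documented default when the override is unset or empty.
    root = Path(cache_root) if cache_root else Path.home() / ".cache" / "lfcache"
    return root / local_path


print(local_cache_path("test_files/onnx/ViT/ViT.onnx", {"LOCAL_LF_CACHE": "/tmp/lfcache"}))
```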

Loading Files from CI

Once a file has been loaded into the S3 bucket, the CI's shared DOCKER_CACHE_DIR, which is set up to sync with the contents of the S3 bucket every hour, will pick it up, and get_file() will fetch the file from DOCKER_CACHE_DIR.

Supported Models


The following models could be run through tt-torch as of Feb 3rd, 2025. Please note that a known bug causes incorrect output for some models; the PCC is reported at the end of each test run. This issue will be addressed soon.

| Model Name | Variant | Pytest Command |
|---|---|---|
| Albert | Masked LM Base | tests/models/albert/test_albert_masked_lm.py::test_albert_masked_lm[single_device-full-albert/albert-base-v2-eval] |
| | Masked LM Large | tests/models/albert/test_albert_masked_lm.py::test_albert_masked_lm[single_device-full-albert/albert-large-v2-eval] |
| | Masked LM XLarge | tests/models/albert/test_albert_masked_lm.py::test_albert_masked_lm[single_device-full-albert/albert-xlarge-v2-eval] |
| | Masked LM XXLarge | tests/models/albert/test_albert_masked_lm.py::test_albert_masked_lm[single_device-full-albert/albert-xxlarge-v2-eval] |
| | Sequence Classification Base | tests/models/albert/test_albert_sequence_classification.py::test_albert_sequence_classification[single_device-full-textattack/albert-base-v2-imdb-eval] |
| | Token Classification Base | tests/models/albert/test_albert_token_classification.py::test_albert_token_classification[single_device-full-albert/albert-base-v2-eval] |
| Autoencoder | (linear) | tests/models/autoencoder_linear/test_autoencoder_linear.py::test_autoencoder_linear[full-eval] |
| DistilBert | base uncased | tests/models/distilbert/test_distilbert.py::test_distilbert[full-distilbert-base-uncased-eval] |
| Llama | 3B | tests/models/llama/test_llama_3b.py::test_llama_3b[full-meta-llama/Llama-3.2-3B-eval] |
| MLPMixer | | tests/models/mlpmixer/test_mlpmixer.py::test_mlpmixer[full-eval] |
| MNIST | | pytest -svv tests/models/mnist/test_mnist.py::test_mnist_train[single_device-full-eval] |
| MobileNet V2 | | tests/models/MobileNetV2/test_MobileNetV2.py::test_MobileNetV2[full-eval] |
| | TorchVision | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-mobilenet_v2] |
| MobileNet V3 | Small TorchVision | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-mobilenet_v3_small] |
| | Large TorchVision | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-mobilenet_v3_large] |
| OpenPose | | tests/models/openpose/test_openpose_v2.py::test_openpose_v2[full-eval] |
| Perceiver IO | | tests/models/perceiver_io/test_perceiver_io.py::test_perceiver_io[full-eval] |
| ResNet | 18 | tests/models/resnet/test_resnet.py::test_resnet[single_device-full-eval] |
| | 18 TorchVision | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-resnet18] |
| | 34 TorchVision | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-resnet34] |
| | 50 | tests/models/resnet50/test_resnet50.py::test_resnet[single_device-full-eval] |
| | 50 TorchVision | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-resnet50] |
| | 101 TorchVision | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-resnet101] |
| | 152 TorchVision | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-resnet152] |
| Wide ResNet | 50 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-wide_resnet50_2] |
| | 101 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-wide_resnet101_2] |
| ResNeXt | 50 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-resnext50_32x4d] |
| | 101_32x8d | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-resnext101_32x8d] |
| | 101_64x4d | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-resnext101_64x4d] |
| RegNet | y 400 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_y_400mf] |
| | y 800 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_y_800mf] |
| | y 1.6 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_y_1_6gf] |
| | y 3.2 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_y_3_2gf] |
| | y 8 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_y_8gf] |
| | y 16 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_y_16gf] |
| | y 32 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_y_32gf] |
| | x 400 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_x_400mf] |
| | x 800 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_x_800mf] |
| | x 1.6 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_x_1_6gf] |
| | x 3.2 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_x_3_2gf] |
| | x 8 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_x_8gf] |
| | x 16 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_x_16gf] |
| | x 32 | tests/models/torchvision/test_torchvision_image_classification.py::test_torchvision_image_classification[single_device-full-regnet_x_32gf] |
| Yolo | V3 | tests/models/yolov3/test_yolov3.py::test_yolov3[full-eval] |

Ops Documentation


This section contains documentation for Ops operations.

Stablehlo Documentation


This section contains documentation for Stablehlo operations.
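In the tables that follow, the input-variations column lists each operand as Tensor<[d0,d1,...]>. If you want to post-process these tables (for example, to group rows by operand rank), a small parser like the hypothetical one below is enough. It assumes exactly the notation used in these tables and ignores anything else in the cell.

```python
import re

# Matches the Tensor<[d0,d1,...]> notation used in the op tables.
_TENSOR_RE = re.compile(r"Tensor<\[([0-9,]*)\]>")


def parse_input_shapes(cell):
    """Extract operand shapes from a cell like 'Tensor<[1,32,1]>, Tensor<[1,32,1]>,'."""
    shapes = []
    for dims in _TENSOR_RE.findall(cell):
        shapes.append(tuple(int(d) for d in dims.split(",") if d))
    return shapes


print(parse_input_shapes("Tensor<[920,1,256]>, Tensor<[920,1,256]>,"))
# [(920, 1, 256), (920, 1, 256)]
```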

arith.constant

| | STABLE HLO Input Variations | ttnn op | Torch Name | Status |
|---|---|---|---|---|
| 0 | Tensor<[1]>, | | aten::_safe_softmax | 4 |

stablehlo.add::ttnn.add

| | STABLE HLO Input Variations | ttnn op | Torch Name | Status |

|---|---|---|---|---|

| 0 | Tensor<[256,256]>, Tensor<[256,256]>, | ttnn.add | aten::add.Tensor | 6 |

| 1 | Tensor<[1,1,32,32]>, Tensor<[1,1,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 2 | Tensor<[1,32,1]>, Tensor<[1,32,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 3 | Tensor<[1,32,32,128]>, Tensor<[1,32,32,128]>, | ttnn.add | aten::add.Tensor | 5 |

| 4 | Tensor<[1,32,32,32]>, Tensor<[1,32,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 5 | Tensor<[1,32,4096]>, Tensor<[1,32,4096]>, | ttnn.add | aten::add.Tensor | 5 |

| 6 | Tensor<[32]>, Tensor<[32]>, | ttnn.add | aten::arange | 4 |

| 7 | Tensor<[32,1]>, Tensor<[32,1]>, | ttnn.add | aten::triu | 4 |

| 8 | Tensor<[1,7,768]>, Tensor<[1,7,768]>, | ttnn.add | aten::add.Tensor | 5 |

| 9 | Tensor<[7]>, Tensor<[7]>, | ttnn.add | aten::add.Tensor | 4 |

| 10 | Tensor<[1,7,1]>, Tensor<[1,7,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 11 | Tensor<[7,2304]>, Tensor<[7,2304]>, | ttnn.add | aten::add.Tensor | 4 |

| 12 | Tensor<[1,12,7,7]>, Tensor<[1,12,7,7]>, | ttnn.add | aten::add.Tensor | 4 |

| 13 | Tensor<[7,768]>, Tensor<[7,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 14 | Tensor<[7,3072]>, Tensor<[7,3072]>, | ttnn.add | aten::add.Tensor | 4 |

| 15 | Tensor<[1,7,3072]>, Tensor<[1,7,3072]>, | ttnn.add | aten::add.Tensor | 5 |

| 16 | Tensor<[1]>, Tensor<[1]>, | ttnn.add | aten::arange | 4 |

| 17 | Tensor<[1,32,112,112]>, Tensor<[1,32,112,112]>, | ttnn.add | aten::add.Tensor | 4 |

| 18 | Tensor<[64]>, Tensor<[64]>, | ttnn.add | aten::add.Tensor | 4 |

| 19 | Tensor<[1,64,112,112]>, Tensor<[1,64,112,112]>, | ttnn.add | aten::add.Tensor | 4 |

| 20 | Tensor<[1,64,56,56]>, Tensor<[1,64,56,56]>, | ttnn.add | aten::add.Tensor | 4 |

| 21 | Tensor<[128]>, Tensor<[128]>, | ttnn.add | aten::add.Tensor | 4 |

| 22 | Tensor<[1,128,56,56]>, Tensor<[1,128,56,56]>, | ttnn.add | aten::add.Tensor | 4 |

| 23 | Tensor<[1,128,28,28]>, Tensor<[1,128,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 24 | Tensor<[256]>, Tensor<[256]>, | ttnn.add | aten::add.Tensor | 4 |

| 25 | Tensor<[1,256,28,28]>, Tensor<[1,256,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 26 | Tensor<[512]>, Tensor<[512]>, | ttnn.add | aten::add.Tensor | 4 |

| 27 | Tensor<[1,512,28,28]>, Tensor<[1,512,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 28 | Tensor<[1,19,28,28]>, Tensor<[1,19,28,28]>, | ttnn.add | aten::convolution | 4 |

| 29 | Tensor<[1,38,28,28]>, Tensor<[1,38,28,28]>, | ttnn.add | aten::convolution | 4 |

| 30 | Tensor<[256,512]>, Tensor<[256,512]>, | ttnn.add | aten::add.Tensor | 4 |

| 31 | Tensor<[1,256,1]>, Tensor<[1,256,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 32 | Tensor<[1,256,512]>, Tensor<[1,256,512]>, | ttnn.add | aten::add.Tensor | 4 |

| 33 | Tensor<[1,1000]>, Tensor<[1,1000]>, | ttnn.add | aten::add.Tensor | 4 |

| 34 | Tensor<[1,1024,512]>, Tensor<[1,1024,512]>, | ttnn.add | aten::convolution | 4 |

| 35 | Tensor<[1,256,256]>, Tensor<[1,256,256]>, | ttnn.add | aten::gelu | 4 |

| 36 | Tensor<[1,64,1,1]>, Tensor<[1,64,1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 37 | Tensor<[1,64,360,640]>, Tensor<[1,64,360,640]>, | ttnn.add | aten::add.Tensor | 4 |

| 38 | Tensor<[1,64,180,320]>, Tensor<[1,64,180,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 39 | Tensor<[1,256,1,1]>, Tensor<[1,256,1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 40 | Tensor<[1,256,180,320]>, Tensor<[1,256,180,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 41 | Tensor<[1,128,1,1]>, Tensor<[1,128,1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 42 | Tensor<[1,128,180,320]>, Tensor<[1,128,180,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 43 | Tensor<[1,128,90,160]>, Tensor<[1,128,90,160]>, | ttnn.add | aten::add.Tensor | 4 |

| 44 | Tensor<[1,512,1,1]>, Tensor<[1,512,1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 45 | Tensor<[1,512,90,160]>, Tensor<[1,512,90,160]>, | ttnn.add | aten::add.Tensor | 4 |

| 46 | Tensor<[1,256,90,160]>, Tensor<[1,256,90,160]>, | ttnn.add | aten::add.Tensor | 4 |

| 47 | Tensor<[1,256,45,80]>, Tensor<[1,256,45,80]>, | ttnn.add | aten::add.Tensor | 4 |

| 48 | Tensor<[1,1024,1,1]>, Tensor<[1,1024,1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 49 | Tensor<[1,1024,45,80]>, Tensor<[1,1024,45,80]>, | ttnn.add | aten::add.Tensor | 4 |

| 50 | Tensor<[1,512,45,80]>, Tensor<[1,512,45,80]>, | ttnn.add | aten::add.Tensor | 4 |

| 51 | Tensor<[1,512,23,40]>, Tensor<[1,512,23,40]>, | ttnn.add | aten::add.Tensor | 4 |

| 52 | Tensor<[1,2048,1,1]>, Tensor<[1,2048,1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 53 | Tensor<[1,2048,23,40]>, Tensor<[1,2048,23,40]>, | ttnn.add | aten::add.Tensor | 4 |

| 54 | Tensor<[23]>, Tensor<[23]>, | ttnn.add | aten::add.Tensor | 4 |

| 55 | Tensor<[40]>, Tensor<[40]>, | ttnn.add | aten::add.Tensor | 4 |

| 56 | Tensor<[1,1,40]>, Tensor<[1,1,40]>, | ttnn.add | aten::add.Tensor | 4 |

| 57 | Tensor<[1,23,1]>, Tensor<[1,23,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 58 | Tensor<[920,1,256]>, Tensor<[920,1,256]>, | ttnn.add | aten::add.Tensor | 5 |

| 59 | Tensor<[920,256]>, Tensor<[920,256]>, | ttnn.add | aten::add.Tensor | 4 |

| 60 | Tensor<[920,1,1]>, Tensor<[920,1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 61 | Tensor<[920,2048]>, Tensor<[920,2048]>, | ttnn.add | aten::add.Tensor | 4 |

| 62 | Tensor<[100,1,256]>, Tensor<[100,1,256]>, | ttnn.add | aten::add.Tensor | 5 |

| 63 | Tensor<[100,256]>, Tensor<[100,256]>, | ttnn.add | aten::add.Tensor | 4 |

| 64 | Tensor<[100,1,1]>, Tensor<[100,1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 65 | Tensor<[100,2048]>, Tensor<[100,2048]>, | ttnn.add | aten::add.Tensor | 4 |

| 66 | Tensor<[6,1,100,92]>, Tensor<[6,1,100,92]>, | ttnn.add | aten::add.Tensor | 4 |

| 67 | Tensor<[6,1,100,256]>, Tensor<[6,1,100,256]>, | ttnn.add | aten::add.Tensor | 4 |

| 68 | Tensor<[6,1,100,4]>, Tensor<[6,1,100,4]>, | ttnn.add | aten::add.Tensor | 4 |

| 69 | Tensor<[8,920,920]>, Tensor<[8,920,920]>, | ttnn.add | aten::baddbmm | 4 |

| 70 | Tensor<[8,100,920]>, Tensor<[8,100,920]>, | ttnn.add | aten::baddbmm | 4 |

| 71 | Tensor<[1,256,23,40]>, Tensor<[1,256,23,40]>, | ttnn.add | aten::convolution | 4 |

| 72 | Tensor<[1,10]>, Tensor<[1,10]>, | ttnn.add | aten::add.Tensor | 5 |

| 73 | Tensor<[1,10,768]>, Tensor<[1,10,768]>, | ttnn.add | aten::add.Tensor | 5 |

| 74 | Tensor<[1,10,1]>, Tensor<[1,10,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 75 | Tensor<[10,768]>, Tensor<[10,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 76 | Tensor<[1,12,10,10]>, Tensor<[1,12,10,10]>, | ttnn.add | aten::add.Tensor | 4 |

| 77 | Tensor<[10,3072]>, Tensor<[10,3072]>, | ttnn.add | aten::add.Tensor | 4 |

| 78 | Tensor<[10,250002]>, Tensor<[10,250002]>, | ttnn.add | aten::add.Tensor | 4 |

| 79 | Tensor<[1,10,3072]>, Tensor<[1,10,3072]>, | ttnn.add | aten::gelu | 4 |

| 80 | Tensor<[1,1280]>, Tensor<[1,1280]>, | ttnn.add | aten::add.Tensor | 4 |

| 81 | Tensor<[1,32,1,1]>, Tensor<[1,32,1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 82 | Tensor<[1,320,64,64]>, Tensor<[1,320,64,64]>, | ttnn.add | aten::add.Tensor | 4 |

| 83 | Tensor<[1,320]>, Tensor<[1,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 84 | Tensor<[1,4096,1]>, Tensor<[1,4096,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 85 | Tensor<[1,4096,320]>, Tensor<[1,4096,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 86 | Tensor<[4096,320]>, Tensor<[4096,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 87 | Tensor<[4096,2560]>, Tensor<[4096,2560]>, | ttnn.add | aten::add.Tensor | 4 |

| 88 | Tensor<[1,320,32,32]>, Tensor<[1,320,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 89 | Tensor<[1,640]>, Tensor<[1,640]>, | ttnn.add | aten::add.Tensor | 4 |

| 90 | Tensor<[1,640,32,32]>, Tensor<[1,640,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 91 | Tensor<[1,1024,1]>, Tensor<[1,1024,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 92 | Tensor<[1,1024,640]>, Tensor<[1,1024,640]>, | ttnn.add | aten::add.Tensor | 4 |

| 93 | Tensor<[1024,640]>, Tensor<[1024,640]>, | ttnn.add | aten::add.Tensor | 4 |

| 94 | Tensor<[1024,5120]>, Tensor<[1024,5120]>, | ttnn.add | aten::add.Tensor | 4 |

| 95 | Tensor<[1,640,16,16]>, Tensor<[1,640,16,16]>, | ttnn.add | aten::add.Tensor | 4 |

| 96 | Tensor<[1,1280,16,16]>, Tensor<[1,1280,16,16]>, | ttnn.add | aten::add.Tensor | 4 |

| 97 | Tensor<[1,256,1280]>, Tensor<[1,256,1280]>, | ttnn.add | aten::add.Tensor | 4 |

| 98 | Tensor<[256,1280]>, Tensor<[256,1280]>, | ttnn.add | aten::add.Tensor | 4 |

| 99 | Tensor<[256,10240]>, Tensor<[256,10240]>, | ttnn.add | aten::add.Tensor | 4 |

| 100 | Tensor<[1,1280,8,8]>, Tensor<[1,1280,8,8]>, | ttnn.add | aten::add.Tensor | 4 |

| 101 | Tensor<[1,64,1]>, Tensor<[1,64,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 102 | Tensor<[1,64,1280]>, Tensor<[1,64,1280]>, | ttnn.add | aten::add.Tensor | 4 |

| 103 | Tensor<[64,1280]>, Tensor<[64,1280]>, | ttnn.add | aten::add.Tensor | 4 |

| 104 | Tensor<[64,10240]>, Tensor<[64,10240]>, | ttnn.add | aten::add.Tensor | 4 |

| 105 | Tensor<[1,2560,8,8]>, Tensor<[1,2560,8,8]>, | ttnn.add | aten::add.Tensor | 4 |

| 106 | Tensor<[16]>, Tensor<[16]>, | ttnn.add | aten::add.Tensor | 4 |

| 107 | Tensor<[1,2560,16,16]>, Tensor<[1,2560,16,16]>, | ttnn.add | aten::add.Tensor | 4 |

| 108 | Tensor<[1,1920,16,16]>, Tensor<[1,1920,16,16]>, | ttnn.add | aten::add.Tensor | 4 |

| 109 | Tensor<[1,1920,32,32]>, Tensor<[1,1920,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 110 | Tensor<[1,1280,32,32]>, Tensor<[1,1280,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 111 | Tensor<[1,960,32,32]>, Tensor<[1,960,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 112 | Tensor<[1,960,64,64]>, Tensor<[1,960,64,64]>, | ttnn.add | aten::add.Tensor | 4 |

| 113 | Tensor<[1,640,64,64]>, Tensor<[1,640,64,64]>, | ttnn.add | aten::add.Tensor | 4 |

| 114 | Tensor<[160]>, Tensor<[160]>, | ttnn.add | aten::arange.start | 4 |

| 115 | Tensor<[1,4,64,64]>, Tensor<[1,4,64,64]>, | ttnn.add | aten::convolution | 4 |

| 116 | Tensor<[1,4096,1280]>, Tensor<[1,4096,1280]>, | ttnn.add | aten::gelu | 4 |

| 117 | Tensor<[1,1024,2560]>, Tensor<[1,1024,2560]>, | ttnn.add | aten::gelu | 4 |

| 118 | Tensor<[1,256,5120]>, Tensor<[1,256,5120]>, | ttnn.add | aten::gelu | 4 |

| 119 | Tensor<[1,64,5120]>, Tensor<[1,64,5120]>, | ttnn.add | aten::gelu | 4 |

| 120 | Tensor<[1280]>, Tensor<[1280]>, | ttnn.add | aten::index.Tensor | 4 |

| 121 | Tensor<[640]>, Tensor<[640]>, | ttnn.add | aten::index.Tensor | 4 |

| 122 | Tensor<[1,25,768]>, Tensor<[1,25,768]>, | ttnn.add | aten::add.Tensor | 5 |

| 123 | Tensor<[1,25,1]>, Tensor<[1,25,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 124 | Tensor<[25,768]>, Tensor<[25,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 125 | Tensor<[1,12,25,25]>, Tensor<[1,12,25,25]>, | ttnn.add | aten::add.Tensor | 4 |

| 126 | Tensor<[25,3072]>, Tensor<[25,3072]>, | ttnn.add | aten::add.Tensor | 4 |

| 127 | Tensor<[25,2]>, Tensor<[25,2]>, | ttnn.add | aten::add.Tensor | 4 |

| 128 | Tensor<[1,1]>, Tensor<[1,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 129 | Tensor<[1,25,3072]>, Tensor<[1,25,3072]>, | ttnn.add | aten::gelu | 4 |

| 130 | Tensor<[1,1445,192]>, Tensor<[1,1445,192]>, | ttnn.add | aten::add.Tensor | 5 |

| 131 | Tensor<[1,1445,1]>, Tensor<[1,1445,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 132 | Tensor<[1445,192]>, Tensor<[1445,192]>, | ttnn.add | aten::add.Tensor | 4 |

| 133 | Tensor<[1445,768]>, Tensor<[1445,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 134 | Tensor<[100,192]>, Tensor<[100,192]>, | ttnn.add | aten::add.Tensor | 4 |

| 135 | Tensor<[100,92]>, Tensor<[100,92]>, | ttnn.add | aten::add.Tensor | 4 |

| 136 | Tensor<[100,4]>, Tensor<[100,4]>, | ttnn.add | aten::add.Tensor | 4 |

| 137 | Tensor<[1,192,32,42]>, Tensor<[1,192,32,42]>, | ttnn.add | aten::convolution | 4 |

| 138 | Tensor<[1,1445,768]>, Tensor<[1,1445,768]>, | ttnn.add | aten::gelu | 4 |

| 139 | Tensor<[1,256,14,14]>, Tensor<[1,256,14,14]>, | ttnn.add | aten::add.Tensor | 4 |

| 140 | Tensor<[1,512,7,7]>, Tensor<[1,512,7,7]>, | ttnn.add | aten::add.Tensor | 4 |

| 141 | Tensor<[1,8,768]>, Tensor<[1,8,768]>, | ttnn.add | aten::add.Tensor | 5 |

| 142 | Tensor<[1,8,1]>, Tensor<[1,8,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 143 | Tensor<[1,12,8,8]>, Tensor<[1,12,8,8]>, | ttnn.add | aten::add.Tensor | 4 |

| 144 | Tensor<[1,768,8]>, Tensor<[1,768,8]>, | ttnn.add | aten::add.Tensor | 5 |

| 145 | Tensor<[1,768]>, Tensor<[1,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 146 | Tensor<[1,3]>, Tensor<[1,3]>, | ttnn.add | aten::add.Tensor | 4 |

| 147 | Tensor<[1,3072,8]>, Tensor<[1,3072,8]>, | ttnn.add | aten::convolution | 4 |

| 148 | Tensor<[1,2048,768]>, Tensor<[1,2048,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 149 | Tensor<[1,2048,1]>, Tensor<[1,2048,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 150 | Tensor<[2048,256]>, Tensor<[2048,256]>, | ttnn.add | aten::add.Tensor | 4 |

| 151 | Tensor<[2048,1280]>, Tensor<[2048,1280]>, | ttnn.add | aten::add.Tensor | 4 |

| 152 | Tensor<[1,8,256,2048]>, Tensor<[1,8,256,2048]>, | ttnn.add | aten::add.Tensor | 4 |

| 153 | Tensor<[256,768]>, Tensor<[256,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 154 | Tensor<[2048,768]>, Tensor<[2048,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 155 | Tensor<[2048,262]>, Tensor<[2048,262]>, | ttnn.add | aten::add.Tensor | 4 |

| 156 | Tensor<[2048]>, Tensor<[2048]>, | ttnn.add | aten::arange.start | 4 |

| 157 | Tensor<[1,256,56,56]>, Tensor<[1,256,56,56]>, | ttnn.add | aten::add.Tensor | 4 |

| 158 | Tensor<[1024]>, Tensor<[1024]>, | ttnn.add | aten::add.Tensor | 4 |

| 159 | Tensor<[1,1024,14,14]>, Tensor<[1,1024,14,14]>, | ttnn.add | aten::add.Tensor | 4 |

| 160 | Tensor<[1,512,14,14]>, Tensor<[1,512,14,14]>, | ttnn.add | aten::add.Tensor | 4 |

| 161 | Tensor<[1,2048,7,7]>, Tensor<[1,2048,7,7]>, | ttnn.add | aten::add.Tensor | 4 |

| 162 | Tensor<[12]>, Tensor<[12]>, | ttnn.add | aten::add.Tensor | 4 |

| 163 | Tensor<[1,193,768]>, Tensor<[1,193,768]>, | ttnn.add | aten::add.Tensor | 5 |

| 164 | Tensor<[1,201,1]>, Tensor<[1,201,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 165 | Tensor<[1,201,768]>, Tensor<[1,201,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 166 | Tensor<[201,768]>, Tensor<[201,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 167 | Tensor<[1,12,201,201]>, Tensor<[1,12,201,201]>, | ttnn.add | aten::add.Tensor | 4 |

| 168 | Tensor<[201,3072]>, Tensor<[201,3072]>, | ttnn.add | aten::add.Tensor | 4 |

| 169 | Tensor<[1,1536]>, Tensor<[1,1536]>, | ttnn.add | aten::add.Tensor | 4 |

| 170 | Tensor<[1,3129]>, Tensor<[1,3129]>, | ttnn.add | aten::add.Tensor | 4 |

| 171 | Tensor<[1,768,12,16]>, Tensor<[1,768,12,16]>, | ttnn.add | aten::convolution | 4 |

| 172 | Tensor<[1,201,3072]>, Tensor<[1,201,3072]>, | ttnn.add | aten::gelu | 4 |

| 173 | Tensor<[1,128]>, Tensor<[1,128]>, | ttnn.add | aten::add.Tensor | 4 |

| 174 | Tensor<[1,32,26,26]>, Tensor<[1,32,26,26]>, | ttnn.add | aten::convolution | 4 |

| 175 | Tensor<[1,64,24,24]>, Tensor<[1,64,24,24]>, | ttnn.add | aten::convolution | 4 |

| 176 | Tensor<[19]>, Tensor<[19]>, | ttnn.add | aten::add.Tensor | 4 |

| 177 | Tensor<[1,19]>, Tensor<[1,19]>, | ttnn.add | aten::add.Tensor | 4 |

| 178 | Tensor<[1,19,1024]>, Tensor<[1,19,1024]>, | ttnn.add | aten::add.Tensor | 5 |

| 179 | Tensor<[1,19,1]>, Tensor<[1,19,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 180 | Tensor<[19,1024]>, Tensor<[19,1024]>, | ttnn.add | aten::add.Tensor | 4 |

| 181 | Tensor<[1,16,19,19]>, Tensor<[1,16,19,19]>, | ttnn.add | aten::add.Tensor | 4 |

| 182 | Tensor<[19,4096]>, Tensor<[19,4096]>, | ttnn.add | aten::add.Tensor | 4 |

| 183 | Tensor<[1,19,4096]>, Tensor<[1,19,4096]>, | ttnn.add | aten::gelu | 4 |

| 184 | Tensor<[14]>, Tensor<[14]>, | ttnn.add | aten::add.Tensor | 4 |

| 185 | Tensor<[1,14,56,56]>, Tensor<[1,14,56,56]>, | ttnn.add | aten::add.Tensor | 4 |

| 186 | Tensor<[24]>, Tensor<[24]>, | ttnn.add | aten::add.Tensor | 4 |

| 187 | Tensor<[1,24,56,56]>, Tensor<[1,24,56,56]>, | ttnn.add | aten::add.Tensor | 4 |

| 188 | Tensor<[1,40,56,56]>, Tensor<[1,40,56,56]>, | ttnn.add | aten::add.Tensor | 4 |

| 189 | Tensor<[68]>, Tensor<[68]>, | ttnn.add | aten::add.Tensor | 4 |

| 190 | Tensor<[1,68,56,56]>, Tensor<[1,68,56,56]>, | ttnn.add | aten::add.Tensor | 4 |

| 191 | Tensor<[1,16,28,28]>, Tensor<[1,16,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 192 | Tensor<[28]>, Tensor<[28]>, | ttnn.add | aten::add.Tensor | 4 |

| 193 | Tensor<[1,28,28,28]>, Tensor<[1,28,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 194 | Tensor<[46]>, Tensor<[46]>, | ttnn.add | aten::add.Tensor | 4 |

| 195 | Tensor<[1,46,28,28]>, Tensor<[1,46,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 196 | Tensor<[78]>, Tensor<[78]>, | ttnn.add | aten::add.Tensor | 4 |

| 197 | Tensor<[1,78,28,28]>, Tensor<[1,78,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 198 | Tensor<[134]>, Tensor<[134]>, | ttnn.add | aten::add.Tensor | 4 |

| 199 | Tensor<[1,134,28,28]>, Tensor<[1,134,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 200 | Tensor<[20]>, Tensor<[20]>, | ttnn.add | aten::add.Tensor | 4 |

| 201 | Tensor<[1,20,28,28]>, Tensor<[1,20,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 202 | Tensor<[34]>, Tensor<[34]>, | ttnn.add | aten::add.Tensor | 4 |

| 203 | Tensor<[1,34,28,28]>, Tensor<[1,34,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 204 | Tensor<[58]>, Tensor<[58]>, | ttnn.add | aten::add.Tensor | 4 |

| 205 | Tensor<[1,58,28,28]>, Tensor<[1,58,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 206 | Tensor<[98]>, Tensor<[98]>, | ttnn.add | aten::add.Tensor | 4 |

| 207 | Tensor<[1,98,28,28]>, Tensor<[1,98,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 208 | Tensor<[168]>, Tensor<[168]>, | ttnn.add | aten::add.Tensor | 4 |

| 209 | Tensor<[1,168,28,28]>, Tensor<[1,168,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 210 | Tensor<[320]>, Tensor<[320]>, | ttnn.add | aten::add.Tensor | 4 |

| 211 | Tensor<[1,320,28,28]>, Tensor<[1,320,28,28]>, | ttnn.add | aten::add.Tensor | 4 |

| 212 | Tensor<[1,40,14,14]>, Tensor<[1,40,14,14]>, | ttnn.add | aten::add.Tensor | 4 |

| 213 | Tensor<[1,68,14,14]>, Tensor<[1,68,14,14]>, | ttnn.add | aten::add.Tensor | 4 |

| 214 | Tensor<[116]>, Tensor<[116]>, | ttnn.add | aten::add.Tensor | 4 |

| 215 | Tensor<[1,116,14,14]>, Tensor<[1,116,14,14]>, | ttnn.add | aten::add.Tensor | 4 |

| 216 | Tensor<[196]>, Tensor<[196]>, | ttnn.add | aten::add.Tensor | 4 |

| 217 | Tensor<[1,196,14,14]>, Tensor<[1,196,14,14]>, | ttnn.add | aten::add.Tensor | 4 |

| 218 | Tensor<[334]>, Tensor<[334]>, | ttnn.add | aten::add.Tensor | 4 |

| 219 | Tensor<[1,334,14,14]>, Tensor<[1,334,14,14]>, | ttnn.add | aten::add.Tensor | 4 |

| 220 | Tensor<[1,640,14,14]>, Tensor<[1,640,14,14]>, | ttnn.add | aten::add.Tensor | 4 |

| 221 | Tensor<[1,160,7,7]>, Tensor<[1,160,7,7]>, | ttnn.add | aten::add.Tensor | 4 |

| 222 | Tensor<[272]>, Tensor<[272]>, | ttnn.add | aten::add.Tensor | 4 |

| 223 | Tensor<[1,272,7,7]>, Tensor<[1,272,7,7]>, | ttnn.add | aten::add.Tensor | 4 |

| 224 | Tensor<[462]>, Tensor<[462]>, | ttnn.add | aten::add.Tensor | 4 |

| 225 | Tensor<[1,462,7,7]>, Tensor<[1,462,7,7]>, | ttnn.add | aten::add.Tensor | 4 |

| 226 | Tensor<[1,1024,7,7]>, Tensor<[1,1024,7,7]>, | ttnn.add | aten::add.Tensor | 4 |

| 227 | Tensor<[1,32,512,512]>, Tensor<[1,32,512,512]>, | ttnn.add | aten::add.Tensor | 4 |

| 228 | Tensor<[1,64,256,256]>, Tensor<[1,64,256,256]>, | ttnn.add | aten::add.Tensor | 4 |

| 229 | Tensor<[1,32,256,256]>, Tensor<[1,32,256,256]>, | ttnn.add | aten::add.Tensor | 4 |

| 230 | Tensor<[1,128,128,128]>, Tensor<[1,128,128,128]>, | ttnn.add | aten::add.Tensor | 4 |

| 231 | Tensor<[1,64,128,128]>, Tensor<[1,64,128,128]>, | ttnn.add | aten::add.Tensor | 4 |

| 232 | Tensor<[1,256,64,64]>, Tensor<[1,256,64,64]>, | ttnn.add | aten::add.Tensor | 4 |

| 233 | Tensor<[1,128,64,64]>, Tensor<[1,128,64,64]>, | ttnn.add | aten::add.Tensor | 4 |

| 234 | Tensor<[1,512,32,32]>, Tensor<[1,512,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 235 | Tensor<[1,256,32,32]>, Tensor<[1,256,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 236 | Tensor<[1,1024,16,16]>, Tensor<[1,1024,16,16]>, | ttnn.add | aten::add.Tensor | 4 |

| 237 | Tensor<[1,512,16,16]>, Tensor<[1,512,16,16]>, | ttnn.add | aten::add.Tensor | 4 |

| 238 | Tensor<[1,256,16,16]>, Tensor<[1,256,16,16]>, | ttnn.add | aten::add.Tensor | 4 |

| 239 | Tensor<[1,128,32,32]>, Tensor<[1,128,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 240 | Tensor<[1,255,16,16]>, Tensor<[1,255,16,16]>, | ttnn.add | aten::convolution | 4 |

| 241 | Tensor<[1,255,32,32]>, Tensor<[1,255,32,32]>, | ttnn.add | aten::convolution | 4 |

| 242 | Tensor<[1,255,64,64]>, Tensor<[1,255,64,64]>, | ttnn.add | aten::convolution | 4 |

| 243 | Tensor<[1,1,256,256]>, Tensor<[1,1,256,256]>, | ttnn.add | aten::convolution | 4 |

| 244 | Tensor<[1,4,14,14]>, Tensor<[1,4,14,14]>, | ttnn.add | aten::convolution | 4 |

| 245 | Tensor<[1,16,14,14]>, Tensor<[1,16,14,14]>, | ttnn.add | aten::convolution | 4 |

| 246 | Tensor<[1,1,28,28]>, Tensor<[1,1,28,28]>, | ttnn.add | aten::convolution | 4 |

| 247 | Tensor<[1,32,1536]>, Tensor<[1,32,1536]>, | ttnn.add | aten::add.Tensor | 4 |

| 248 | Tensor<[32,4608]>, Tensor<[32,4608]>, | ttnn.add | aten::add.Tensor | 4 |

| 249 | Tensor<[1,16,32,32]>, Tensor<[1,16,32,32]>, | ttnn.add | aten::add.Tensor | 4 |

| 250 | Tensor<[32,1536]>, Tensor<[32,1536]>, | ttnn.add | aten::add.Tensor | 4 |

| 251 | Tensor<[32,6144]>, Tensor<[32,6144]>, | ttnn.add | aten::add.Tensor | 4 |

| 252 | Tensor<[1,32,6144]>, Tensor<[1,32,6144]>, | ttnn.add | aten::add.Tensor | 4 |

| 253 | Tensor<[16,32,32]>, Tensor<[16,32,32]>, | ttnn.add | aten::baddbmm | 4 |

| 254 | Tensor<[1,16,768]>, Tensor<[1,16,768]>, | ttnn.add | aten::add.Tensor | 5 |

| 255 | Tensor<[1,16,1]>, Tensor<[1,16,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 256 | Tensor<[16,768]>, Tensor<[16,768]>, | ttnn.add | aten::add.Tensor | 4 |

| 257 | Tensor<[1,12,16,16]>, Tensor<[1,12,16,16]>, | ttnn.add | aten::add.Tensor | 4 |

| 258 | Tensor<[16,3072]>, Tensor<[16,3072]>, | ttnn.add | aten::add.Tensor | 4 |

| 259 | Tensor<[1,16,3072]>, Tensor<[1,16,3072]>, | ttnn.add | aten::gelu | 4 |

| 260 | Tensor<[1,64,224,224]>, Tensor<[1,64,224,224]>, | ttnn.add | aten::add.Tensor | 4 |

| 261 | Tensor<[1,128,112,112]>, Tensor<[1,128,112,112]>, | ttnn.add | aten::add.Tensor | 4 |

| 262 | Tensor<[1,1,224,224]>, Tensor<[1,1,224,224]>, | ttnn.add | aten::convolution | 4 |

| 263 | Tensor<[1,19200,1]>, Tensor<[1,19200,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 264 | Tensor<[1,19200,64]>, Tensor<[1,19200,64]>, | ttnn.add | aten::add.Tensor | 4 |

| 265 | Tensor<[19200,64]>, Tensor<[19200,64]>, | ttnn.add | aten::add.Tensor | 4 |

| 266 | Tensor<[1,300,1]>, Tensor<[1,300,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 267 | Tensor<[1,300,64]>, Tensor<[1,300,64]>, | ttnn.add | aten::add.Tensor | 4 |

| 268 | Tensor<[300,64]>, Tensor<[300,64]>, | ttnn.add | aten::add.Tensor | 4 |

| 269 | Tensor<[19200,256]>, Tensor<[19200,256]>, | ttnn.add | aten::add.Tensor | 4 |

| 270 | Tensor<[1,4800,1]>, Tensor<[1,4800,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 271 | Tensor<[1,4800,128]>, Tensor<[1,4800,128]>, | ttnn.add | aten::add.Tensor | 4 |

| 272 | Tensor<[4800,128]>, Tensor<[4800,128]>, | ttnn.add | aten::add.Tensor | 4 |

| 273 | Tensor<[1,300,128]>, Tensor<[1,300,128]>, | ttnn.add | aten::add.Tensor | 4 |

| 274 | Tensor<[300,128]>, Tensor<[300,128]>, | ttnn.add | aten::add.Tensor | 4 |

| 275 | Tensor<[4800,512]>, Tensor<[4800,512]>, | ttnn.add | aten::add.Tensor | 4 |

| 276 | Tensor<[1,1200,1]>, Tensor<[1,1200,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 277 | Tensor<[1,1200,320]>, Tensor<[1,1200,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 278 | Tensor<[1200,320]>, Tensor<[1200,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 279 | Tensor<[1,300,320]>, Tensor<[1,300,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 280 | Tensor<[300,320]>, Tensor<[300,320]>, | ttnn.add | aten::add.Tensor | 4 |

| 281 | Tensor<[1200,1280]>, Tensor<[1200,1280]>, | ttnn.add | aten::add.Tensor | 4 |

| 282 | Tensor<[1,300,512]>, Tensor<[1,300,512]>, | ttnn.add | aten::add.Tensor | 4 |

| 283 | Tensor<[300,512]>, Tensor<[300,512]>, | ttnn.add | aten::add.Tensor | 4 |

| 284 | Tensor<[300,2048]>, Tensor<[300,2048]>, | ttnn.add | aten::add.Tensor | 4 |

| 285 | Tensor<[30]>, Tensor<[30]>, | ttnn.add | aten::add.Tensor | 4 |

| 286 | Tensor<[30,1]>, Tensor<[30,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 287 | Tensor<[1,64,30,40]>, Tensor<[1,64,30,40]>, | ttnn.add | aten::add.Tensor | 5 |

| 288 | Tensor<[1,32,30,40]>, Tensor<[1,32,30,40]>, | ttnn.add | aten::add.Tensor | 4 |

| 289 | Tensor<[60]>, Tensor<[60]>, | ttnn.add | aten::add.Tensor | 4 |

| 290 | Tensor<[60,1]>, Tensor<[60,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 291 | Tensor<[80]>, Tensor<[80]>, | ttnn.add | aten::add.Tensor | 4 |

| 292 | Tensor<[1,64,60,80]>, Tensor<[1,64,60,80]>, | ttnn.add | aten::add.Tensor | 5 |

| 293 | Tensor<[1,32,60,80]>, Tensor<[1,32,60,80]>, | ttnn.add | aten::add.Tensor | 4 |

| 294 | Tensor<[120]>, Tensor<[120]>, | ttnn.add | aten::add.Tensor | 4 |

| 295 | Tensor<[120,1]>, Tensor<[120,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 296 | Tensor<[1,64,120,160]>, Tensor<[1,64,120,160]>, | ttnn.add | aten::add.Tensor | 5 |

| 297 | Tensor<[1,32,120,160]>, Tensor<[1,32,120,160]>, | ttnn.add | aten::add.Tensor | 4 |

| 298 | Tensor<[240]>, Tensor<[240]>, | ttnn.add | aten::add.Tensor | 4 |

| 299 | Tensor<[240,1]>, Tensor<[240,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 300 | Tensor<[1,64,240,320]>, Tensor<[1,64,240,320]>, | ttnn.add | aten::add.Tensor | 5 |

| 301 | Tensor<[480]>, Tensor<[480]>, | ttnn.add | aten::add.Tensor | 4 |

| 302 | Tensor<[480,1]>, Tensor<[480,1]>, | ttnn.add | aten::add.Tensor | 4 |

| 303 | Tensor<[1,64,480,640]>, Tensor<[1,64,480,640]>, | ttnn.add | aten::add.Tensor | 5 |

| 304 | Tensor<[1,64,15,20]>, Tensor<[1,64,15,20]>, | ttnn.add | aten::convolution | 4 |

| 305 | Tensor<[1,256,120,160]>, Tensor<[1,256,120,160]>, | ttnn.add | aten::convolution | 4 |

| 306 | Tensor<[1,128,60,80]>, Tensor<[1,128,60,80]>, | ttnn.add | aten::convolution | 4 |

| 307 | Tensor<[1,128,15,20]>, Tensor<[1,128,15,20]>, | ttnn.add | aten::convolution | 4 |

| 308 | Tensor<[1,512,60,80]>, Tensor<[1,512,60,80]>, | ttnn.add | aten::convolution | 4 |

| 309 | Tensor<[1,320,30,40]>, Tensor<[1,320,30,40]>, | ttnn.add | aten::convolution | 4 |

| 310 | Tensor<[1,320,15,20]>, Tensor<[1,320,15,20]>, | ttnn.add | aten::convolution | 4 |

| 311 | Tensor<[1,1280,30,40]>, Tensor<[1,1280,30,40]>, | ttnn.add | aten::convolution | 4 |

| 312 | Tensor<[1,512,15,20]>, Tensor<[1,512,15,20]>, | ttnn.add | aten::convolution | 4 |

| 313 | Tensor<[1,2048,15,20]>, Tensor<[1,2048,15,20]>, | ttnn.add | aten::convolution | 4 |

| 314 | Tensor<[1,2,30,40]>, Tensor<[1,2,30,40]>, | ttnn.add | aten::convolution | 4 |

| 315 | Tensor<[1,2,60,80]>, Tensor<[1,2,60,80]>, | ttnn.add | aten::convolution | 4 |

| 316 | Tensor<[1,2,120,160]>, Tensor<[1,2,120,160]>, | ttnn.add | aten::convolution | 4 |

| 317 | Tensor<[1,1,480,640]>, Tensor<[1,1,480,640]>, | ttnn.add | aten::convolution | 4 |

| 318 | Tensor<[1,19200,256]>, Tensor<[1,19200,256]>, | ttnn.add | aten::gelu | 4 |

| 319 | Tensor<[1,4800,512]>, Tensor<[1,4800,512]>, | ttnn.add | aten::gelu | 4 |