Introduction

Welcome to tt-mlir, Tenstorrent's open-source MLIR-based compiler infrastructure designed to optimize and deploy machine learning models and other computational workloads on Tenstorrent hardware. This documentation provides an overview of the key components, features, and usage of tt-mlir.

Architecture & Dialect Overview

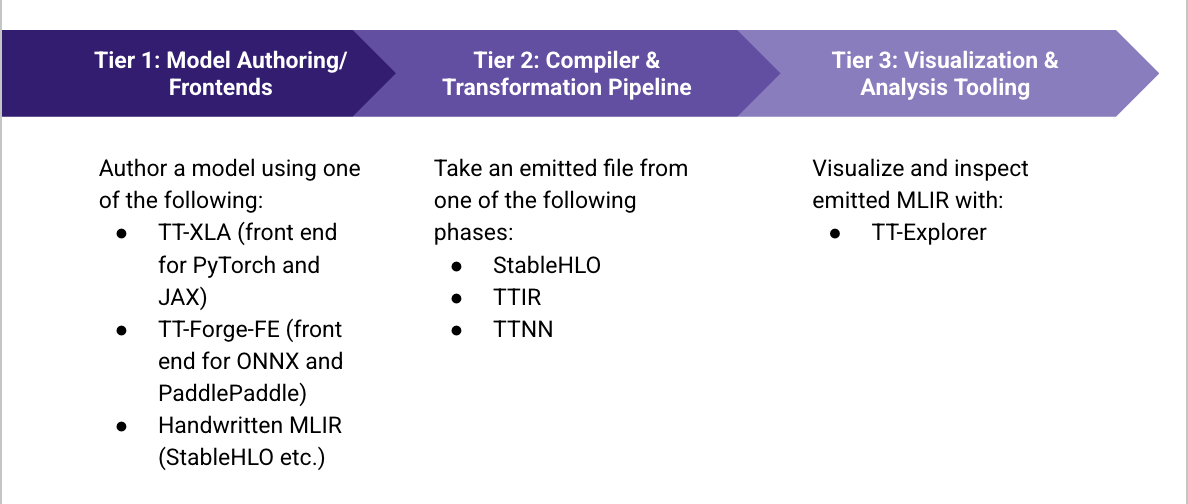

tt-mlir is structured around several core dialects and components that facilitate the compilation process from high-level representations to low-level code generation. While the architecture diagram below illustrates Tenstorrent’s compiler flow, it also reflects the various dialect abstractions defined within tt-mlir.

tt-mlir is a library of reusable components that can be used to build compilers targeting Tenstorrent hardware. Other compiler technologies may choose to leverage whichever abstractions best suit their needs.

// TTIR: Named ops on tensors (akin to shlo, tosa, etc)

//

// This should be the default IR that users who need a higher-level abstraction

// over tensors.

//

// Example IR:

func.func @simple_linear(

%arg0: tensor<64x128xbf16>,

%arg1: tensor<128x64xbf16>,

%bias: tensor<64x64xbf16>) -> tensor<64x64xbf16> {

%0 = ttir.empty() : tensor<64x64xbf16>

%1 = "ttir.linear"(%arg0, %arg1, %bias, %0) : (tensor<64x128xbf16>, tensor<128x64xbf16>, tensor<64x64xbf16>, tensor<64x64xbf16>) -> tensor<64x64xbf16>

return %1 : tensor<64x64xbf16>

}

// TTNN: Named ops on tensors (akin to shlo, tosa, etc)

//

// The TTNN dialect models the tt-nn API (from the tt-metalium project)

// as closely as possible. It is intended to be a high-level IR over tensors.

//

// Example IR:

#ttnn_layout = #ttnn.ttnn_layout<(d0, d1) -> (d0, d1), <1x1>, memref<2x4x!ttcore.tile<32x32, bf16>, #dram>, <interleaved>>

#ttnn_layout1 = #ttnn.ttnn_layout<(d0, d1) -> (d0, d1), <1x1>, memref<4x2x!ttcore.tile<32x32, bf16>, #dram>, <interleaved>>

#ttnn_layout2 = #ttnn.ttnn_layout<(d0, d1) -> (d0, d1), <1x1>, memref<2x2x!ttcore.tile<32x32, bf16>, #dram>, <interleaved>>

func.func @simple_linear(

%arg0: tensor<64x128xbf16, #ttnn_layout>,

%arg1: tensor<128x64xbf16, #ttnn_layout1>,

%arg2: tensor<64x64xbf16, #ttnn_layout2>

) -> tensor<64x64xbf16, #ttnn_layout2> {

%0 = "ttnn.linear"(%arg0, %arg1, %arg2) <{

transpose_a = false,

transpose_b = false

}> : (tensor<64x128xbf16, #ttnn_layout>, tensor<128x64xbf16, #ttnn_layout1>, tensor<64x64xbf16, #ttnn_layout2>) -> tensor<64x64xbf16, #ttnn_layout2>

"ttnn.deallocate"(%arg2) <{force = false}> : (tensor<64x64xbf16, #ttnn_layout2>) -> ()

"ttnn.deallocate"(%arg1) <{force = false}> : (tensor<128x64xbf16, #ttnn_layout1>) -> ()

"ttnn.deallocate"(%arg0) <{force = false}> : (tensor<64x128xbf16, #ttnn_layout>) -> ()

return %0 : tensor<64x64xbf16, #ttnn_layout2>

}

// D2M: Generic compute dialect (akin to linalg)

//

// The D2M dialect models generic compute on tensors and memrefs,

// similar to linalg.generic, but with constructs that map well to

// the Tenstorrent execution model (e.g., sharded tensors, grids,

// explicit datamovement).

//

// Example IR:

#layout = #ttcore.metal_layout<logical_shape = 64x128, dim_alignments = 32x32, collapsed_intervals = dense<[[0, 1], [1, 2]]> : tensor<2x2xi64>, undef, l1>

#layout1 = #ttcore.metal_layout<logical_shape = 128x96, dim_alignments = 32x32, collapsed_intervals = dense<[[0, 1], [1, 2]]> : tensor<2x2xi64>, undef, l1>

#layout2 = #ttcore.metal_layout<logical_shape = 64x96, dim_alignments = 32x32, collapsed_intervals = dense<[[0, 1], [1, 2]]> : tensor<2x2xi64>, undef, l1>

#map = affine_map<(d0, d1, d2) -> (d0, d2)>

#map1 = affine_map<(d0, d1, d2) -> (d2, d1)>

#map2 = affine_map<(d0, d1, d2) -> (d0, d1)>

#parallel = #ttcore.iterator_type

#reduction = #ttcore.iterator_type

func.func @simple_matmul(

%arg0: tensor<64x128xbf16>,

%arg1: tensor<128x96xbf16>

) -> tensor<64x96xbf16> {

%0 = d2m.empty() : tensor<64x96xbf16>

%1 = d2m.empty() : tensor<1x1x2x4x!ttcore.tile<32x32, bf16>, #layout>

%2 = d2m.to_layout %arg0, %1 : tensor<64x128xbf16> into tensor<1x1x2x4x!ttcore.tile<32x32, bf16>, #layout> -> tensor<1x1x2x4x!ttcore.tile<32x32, bf16>, #layout>

%3 = d2m.empty() : tensor<1x1x4x3x!ttcore.tile<32x32, bf16>, #layout1>

%4 = d2m.to_layout %arg1, %3 : tensor<128x96xbf16> into tensor<1x1x4x3x!ttcore.tile<32x32, bf16>, #layout1> -> tensor<1x1x4x3x!ttcore.tile<32x32, bf16>, #layout1>

%5 = d2m.empty() : tensor<1x1x2x3x!ttcore.tile<32x32, bf16>, #layout2>

%6 = d2m.to_layout %0, %5 : tensor<64x96xbf16> into tensor<1x1x2x3x!ttcore.tile<32x32, bf16>, #layout2> -> tensor<1x1x2x3x!ttcore.tile<32x32, bf16>, #layout2>

%7 = d2m.generic {

block_factors = [1, 1, 1],

grid = #ttcore.grid<1x1>,

indexing_maps = [#map, #map1, #map2],

iterator_types = [#parallel, #parallel, #reduction],

threads = [#d2m.thread]

} ins(%2, %4 : tensor<1x1x2x4x!ttcore.tile<32x32, bf16>, #layout>, tensor<1x1x4x3x!ttcore.tile<32x32, bf16>, #layout1>)

outs(%6 : tensor<1x1x2x3x!ttcore.tile<32x32, bf16>, #layout2>) {

^compute0(%cb0: !d2m.cb>>, %cb1: !d2m.cb>>, %cb2: !d2m.cb>>):

%10 = d2m.wait %cb0 : >> -> tensor<2x4x!ttcore.tile<32x32, bf16>>

%11 = d2m.wait %cb1 : >> -> tensor<4x3x!ttcore.tile<32x32, bf16>>

%12 = d2m.reserve %cb2 : >> -> tensor<2x3x!ttcore.tile<32x32, bf16>>

%13 = linalg.generic {

indexing_maps = [#map, #map1, #map2],

iterator_types = ["parallel", "parallel", "reduction"]

} ins(%10, %11 : tensor<2x4x!ttcore.tile<32x32, bf16>>, tensor<4x3x!ttcore.tile<32x32, bf16>>) outs(%12 : tensor<2x3x!ttcore.tile<32x32, bf16>>) {

^bb0(%in: !ttcore.tile<32x32, bf16>, %in_0: !ttcore.tile<32x32, bf16>, %out: !ttcore.tile<32x32, bf16>):

%14 = "d2m.tile_matmul"(%in, %in_0, %out) : (!ttcore.tile<32x32, bf16>, !ttcore.tile<32x32, bf16>, !ttcore.tile<32x32, bf16>) -> !ttcore.tile<32x32, bf16>

linalg.yield %14 : !ttcore.tile<32x32, bf16>

} -> tensor<2x3x!ttcore.tile<32x32, bf16>>

d2m.yield %13 : (tensor<2x3x!ttcore.tile<32x32, bf16>>)

} : tensor<1x1x2x3x!ttcore.tile<32x32, bf16>, #layout2>

%8 = d2m.empty() : tensor<64x96xbf16>

%9 = d2m.to_layout %7, %8 : tensor<1x1x2x3x!ttcore.tile<32x32, bf16>, #layout2> into tensor<64x96xbf16> -> tensor<64x96xbf16>

return %9 : tensor<64x96xbf16>

}

// TTKernel: tt-metal device kernel dialect.

//

// The TTKernel dialect models low-level kernels that run on Tenstorrent

// devices. It exposes concepts such as circular buffers, tile registers,

// noc transactions and explicit synchronization. It is intended to be a

// 1-1 mapping to tt-metalium kernels.

//

// Example Datamovement Kernel IR:

func.func private @datamovement_kernel() attributes {

ttkernel.arg_spec = #ttkernel.arg_spec<ct_args = [

<arg_type = cb_port, operand_index = 0>,

<arg_type = cb_port, operand_index = 1>

]>,

ttkernel.thread = #ttkernel.thread<noc>

} {

%0 = ttkernel.get_compile_time_arg_val(0) : () -> !ttkernel.cb<1, !ttcore.tile<32x32, bf16>>

%1 = ttkernel.get_compile_time_arg_val(1) : () -> !ttkernel.cb<1024, bf16>

%c1_i32 = arith.constant 1 : i32

ttkernel.cb_reserve_back(%0, %c1_i32) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

ttkernel.cb_push_back(%0, %c1_i32) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

return

}

//

// Example Compute Kernel IR:

func.func private @compute_kernel8() attributes {

ttkernel.arg_spec = #ttkernel.arg_spec<ct_args = [

<arg_type = cb_port, operand_index = 0>,

<arg_type = cb_port, operand_index = 1>,

<arg_type = cb_port, operand_index = 2>

]>,

ttkernel.thread = #ttkernel.thread<compute>

} {

%0 = ttkernel.get_compile_time_arg_val(0) : () -> !ttkernel.cb<1, !ttcore.tile<32x32, bf16>>

%1 = ttkernel.get_compile_time_arg_val(1) : () -> !ttkernel.cb<1, !ttcore.tile<32x32, bf16>>

%2 = ttkernel.get_compile_time_arg_val(2) : () -> !ttkernel.cb<1, !ttcore.tile<32x32, bf16>>

%c0 = arith.constant 0 : index

%c1_i32 = arith.constant 1 : i32

%c1_i32_0 = arith.constant 1 : i32

%c1_i32_1 = arith.constant 1 : i32

%c1_i32_2 = arith.constant 1 : i32

%c0_i32 = arith.constant 0 : i32

"ttkernel.mm_block_init"(%0, %1, %2, %c0_i32, %c1_i32_1, %c1_i32, %c1_i32_0) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, !ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, !ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32, i32, i32, i32) -> ()

%c4 = arith.constant 4 : index

%c1 = arith.constant 1 : index

%c0_3 = arith.constant 0 : index

scf.for %arg0 = %c0_3 to %c4 step %c1 {

%c1_i32_4 = arith.constant 1 : i32

ttkernel.cb_wait_front(%0, %c1_i32_4) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

%c1_i32_5 = arith.constant 1 : i32

ttkernel.cb_wait_front(%1, %c1_i32_5) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

%c1_i32_6 = arith.constant 1 : i32

ttkernel.cb_reserve_back(%2, %c1_i32_6) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

ttkernel.tile_regs_acquire() : () -> ()

%3 = arith.cmpi ne, %arg0, %c0_3 : index

scf.if %3 {

ttkernel.copy_tile_init(%2) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>) -> ()

ttkernel.copy_tile(%2, %c0_3, %c0_3) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, index, index) -> ()

}

"ttkernel.mm_block_init_short"(%0, %1, %c0_i32, %c1_i32_1, %c1_i32, %c1_i32_0) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, !ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32, i32, i32, i32) -> ()

%c0_7 = arith.constant 0 : index

%c0_8 = arith.constant 0 : index

"ttkernel.experimental::matmul_block"(%0, %1, %c0_7, %c0_8, %c0, %c0_i32, %c1_i32_1, %c1_i32, %c1_i32_0, %c1_i32_2) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, !ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, index, index, index, i32, i32, i32, i32, i32) -> ()

ttkernel.pack_tile(%c0_3, %2, %c0_3, true) : (index, !ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, index) -> ()

ttkernel.cb_pop_front(%0, %c1_i32_4) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

ttkernel.cb_pop_front(%1, %c1_i32_5) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

ttkernel.cb_push_back(%2, %c1_i32_6) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

%c1_i32_4 = arith.constant 1 : i32

ttkernel.cb_wait_front(%2, %c1_i32_4) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

ttkernel.cb_pop_front(%2, %c1_i32_4) : (!ttkernel.cb<1, !ttcore.tile<32x32, bf16>>, i32) -> ()

}

return

}

// TTMetal: tt-metal host/device interop dialect.

//

// The TTMetal dialect models host-side operations for managing Tenstorrent

// devices, such as buffer allocation, data transfer, and enqueueing programs.

//

// Example IR:

func.func @simple_matmul(

%arg0: memref<64x128xbf16>,

%arg1: memref<128x96xbf16>

) -> memref<64x96xbf16> {

%0 = "ttmetal.create_buffer"() <{address = 13312 : i64}> : () -> memref<2x4x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>

%1 = "ttmetal.create_buffer"() <{address = 1024 : i64}> : () -> memref<2x4x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>

"ttmetal.enqueue_write_buffer"(%arg0, %1) : (memref<64x128xbf16>, memref<2x4x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.enqueue_program"(%1, %0, %1, %0) <{

cb_ports = array<i64: 0, 1>,

kernelConfigs = [

#ttmetal.noc_config<@datamovement_kernel0, #ttmetal.core_range<0x0, 2x4>, #ttmetal.kernel_args<ct_args = [<cb_port[0]>, <cb_port[1]>]>, noc0>,

#ttmetal.noc_config<@datamovement_kernel1, #ttmetal.core_range<0x0, 2x4>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>]>, noc1>,

#ttmetal.compute_config<@compute_kernel2, #ttmetal.core_range<0x0, 2x4>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>]>, hifi4, false, false, false, [default]>

],

operandSegmentSizes = array<i32: 2, 2>

}> : (memref<2x4x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>, memref<2x4x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>, memref<2x4x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>, memref<2x4x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.deallocate_buffer"(%1) : (memref<2x4x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>) -> ()

%2 = "ttmetal.create_buffer"() <{address = 11264 : i64}> : () -> memref<4x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>

%3 = "ttmetal.create_buffer"() <{address = 1024 : i64}> : () -> memref<4x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>

"ttmetal.enqueue_write_buffer"(%arg1, %3) : (memref<128x96xbf16>, memref<4x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.enqueue_program"(%3, %2, %3, %2) <{

cb_ports = array<i64: 0, 1>,

kernelConfigs = [

#ttmetal.noc_config<@datamovement_kernel3, #ttmetal.core_range<0x0, 4x3>,#ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>]>, noc0>,

#ttmetal.noc_config<@datamovement_kernel4, #ttmetal.core_range<0x0, 4x3>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>]>, noc1>,

#ttmetal.compute_config<@compute_kernel5, #ttmetal.core_range<0x0, 4x3>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>]>, hifi4, false, false, false, [default]>

],

operandSegmentSizes = array<i32: 2, 2>

}> : (memref<4x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>, memref<4x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>, memref<4x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>, memref<4x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.deallocate_buffer"(%3) : (memref<4x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>) -> ()

%4 = "ttmetal.create_buffer"() <{address = 9216 : i64}> : () -> memref<2x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>

%5 = "ttmetal.create_buffer"() <{address = 1024 : i64}> : () -> memref<2x4x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048, 2>, #ttcore.memory_space<l1>>

"ttmetal.enqueue_program"(%0, %2, %4, %5, %6, %4) <{

cb_ports = array<i64: 0, 1, 2>,

kernelConfigs = [

#ttmetal.noc_config<@datamovement_kernel6, #ttmetal.core_range<0x0, 2x3>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>, <cb_port[2]>, <semaphore[0]>, <semaphore[1]>, <semaphore[2]>, <semaphore[3]>, <buffer_address[0]>]>, noc0>,

#ttmetal.noc_config<@datamovement_kernel7, #ttmetal.core_range<0x0, 2x3>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>, <cb_port[2]>, <semaphore[0]>, <semaphore[1]>, <semaphore[2]>, <semaphore[3]>, <buffer_address[1]>]>, noc1>,

#ttmetal.compute_config<@compute_kernel8, #ttmetal.core_range<0x0, 2x3>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>, <cb_port[2]>]>, hifi4, false, false, false, [default]>

],

operandSegmentSizes = array<i32: 3, 3>

}> : (memref<2x4x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>, memref<4x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>, memref<2x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>, memref<2x4x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048, 2>, #ttcore.memory_space<l1>>, memref<4x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048, 2>, #ttcore.memory_space<l1>>, memref<2x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.deallocate_buffer"(%6) : (memref<4x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048, 2>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.deallocate_buffer"(%2) : (memref<4x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.deallocate_buffer"(%5) : (memref<2x4x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048, 2>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.deallocate_buffer"(%0) : (memref<2x4x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>) -> ()

%alloc = memref.alloc() : memref<64x96xbf16>

%7 = "ttmetal.create_buffer"() <{address = 1024 : i64}> : () -> memref<2x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>

"ttmetal.enqueue_program"(%4, %7, %4, %7) <{

cb_ports = array<i64: 0, 1>,

kernelConfigs = [

#ttmetal.noc_config<@datamovement_kernel9, #ttmetal.core_range<0x0, 2x3>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>]>, noc0>,

#ttmetal.noc_config<@datamovement_kernel10, #ttmetal.core_range<0x0, 2x3>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>]>, noc1>,

#ttmetal.compute_config<@compute_kernel11, #ttmetal.core_range<0x0, 2x3>, #ttmetal.kernel_args< ct_args = [<cb_port[0]>, <cb_port[1]>]>, hifi4, false, false, false, [default]>

],

operandSegmentSizes = array<i32: 2, 2>

}> : (memref<2x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>, memref<2x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>, memref<2x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>, memref<2x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.deallocate_buffer"(%4) : (memref<2x3x1x1x!ttcore.tile<32x32, bf16>, #ttcore.shard<2048x2048>, #ttcore.memory_space<l1>>) -> ()

"ttmetal.enqueue_read_buffer"(%7, %alloc) : (memref<2x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>, memref<64x96xbf16>) -> ()

"ttmetal.finish"() : () -> ()

"ttmetal.deallocate_buffer"(%7) : (memref<2x3x32x32xbf16, #ttcore.shard<64x2>, #ttcore.memory_space<l1>>) -> ()

return %alloc : memref<64x96xbf16>

}

Related Tenstorrent Projects

Getting Started

This page walks you through the steps required to set up tt-mlir.

NOTE: If you have a build issue, you can file a bug here.

Prerequisites

Hardware Setup

Use this guide to set up your hardware - Hardware Setup.

System Dependencies

You can use tt-mlir with Ubuntu or Mac OS, however the runtime does not work on Mac OS. tt-mlir project has the following system dependencies:

- Ubuntu 22.04 OS or Mac OS

- Clang >= 14 & <= 18

- Ninja

- CMake 3.24 or higher

- Python 3.12

- python3.12-venv

Ubuntu

Install Clang, Ninja, CMake, and python3.12-venv:

sudo apt install git clang cmake ninja-build pip python3.12-venv

You should now have the required dependencies installed.

NOTE: If you intend to build with runtime enabled (

-DTTMLIR_ENABLE_RUNTIME=ON), you also need to install tt-metal dependencies which can be found here.

Full developer dependencies as packaged in our docker image:

apt-get update -qq

apt-get install -y -qq \

software-properties-common \

build-essential \

python3-pip \

git \

libhwloc-dev \

pandoc \

libtbb-dev \

libcapstone-dev \

pkg-config \

linux-tools-generic \

ninja-build \

wget \

libgtest-dev \

cmake \

ccache \

doxygen \

graphviz \

libyaml-cpp-dev \

libboost-all-dev \

curl \

jq \

sudo \

lcov \

zstd \

unzip

# Install Python 3.12

add-apt-repository ppa:deadsnakes/ppa -y

apt-get update -qq

apt-get install -y -qq python3.12 python3.12-dev python3.12-venv

# Setup / install metal dependencies

wget -q https://raw.githubusercontent.com/tenstorrent/tt-metal/${TT_METAL_DEPENDENCIES_COMMIT}/{install_dependencies.sh,tt_metal/sfpi-info.sh,tt_metal/sfpi-version}

chmod u+x sfpi-info.sh

bash install_dependencies.sh --docker

Mac OS

On MacOS we need to install the latest version of cmake, and ninja which can be done using Homebrew with (Docs for installing Homebrew: https://brew.sh).

brew install cmake ninja

Clone the tt-mlir Repo

- Clone the tt-mlir repo:

git clone https://github.com/tenstorrent/tt-mlir.git

- Navigate into the tt-mlir folder.

Environment Setup

There are two ways to set up the environment, either using a docker image or building the environment manually. The docker image is recommended on ubuntu since it is easier to set up and use.

Note: Docker path is only supported on ubuntu. For macos please use Setting up the Environment Manually.

Using a Docker Image

Please see Docker Notes for details on how to set up and use the docker image.

Once you have the docker image running and you are logged into the container, you should be ready to build.

Note: Docker path is only supported on ubuntu. For macos please use Setting up the Environment Manually.

Setting up the Environment Manually

This section explains how to manually build the environment so you can use tt-mlir. You only need to build this once, it builds llvm, flatbuffers, and a Python virtual environment. You can specify the LLVM build type by using -DLLVM_BUILD_TYPE=*. The default is MinSizeRel, and available options are listed here.

-

Navigate into the tt-mlir folder.

-

The environment gets installed into a toolchain directory, which is by default set to

/opt/ttmlir-toolchain, but can be overrideen by setting (and persisting in your environment) the environment variableTTMLIR_TOOLCHAIN_DIR. You need to manually create the toolchain directory as follows:

export TTMLIR_TOOLCHAIN_DIR=/opt/ttmlir-toolchain/

sudo mkdir -p "${TTMLIR_TOOLCHAIN_DIR}"

sudo chown -R "${USER}" "${TTMLIR_TOOLCHAIN_DIR}"

- Please ensure that you do not already have an environment (venv) activated before running the following commands:

cmake -B env/build env -DCMAKE_C_COMPILER=clang -DCMAKE_CXX_COMPILER=clang++

cmake --build env/build

source env/activate

NOTE: The last command takes time to run, so give it time to complete.

Building the tt-mlir Project

In this step, you build the tt-mlir project:

source env/activate

cmake -G Ninja -B build

cmake --build build

You have now configured tt-mlir.

You can add different flags to your build. Here are some options to consider:

- To enable the ttnn/metal runtime add

-DTTMLIR_ENABLE_RUNTIME=ON. Clang 17 is the minimum required version when enabling the runtime. - To enable the ttnn/metal perf runtime add

-DTT_RUNTIME_ENABLE_PERF_TRACE=ON. - To accelerate the builds with ccache use

-DCMAKE_CXX_COMPILER_LAUNCHER=ccache. - To workaround OOM issues it can be useful to decrease the number of parallel jobs with

-DCMAKE_BUILD_PARALLEL_LEVEL=4. - If Python bindings aren't required for your project, you can accelerate builds further with the command

-DTTMLIR_ENABLE_BINDINGS_PYTHON=OFF. - To enable

tt-exploreradd the-DTT_RUNTIME_ENABLE_PERF_TRACE=ON,-DTTMLIR_ENABLE_RUNTIME=ON, and-DTT_RUNTIME_DEBUG=ON. - To enable optimizer pass that uses the op model library, add

-DTTMLIR_ENABLE_OPMODEL=ON. - The TTNN build is automatically integrated / handled by the tt-mlir cmake build system. For debugging and further information regarding the TTNN backend build step, please refer to TTNN Documentation.

- The runtime build depends on the

TT_METAL_RUNTIME_ROOTvariable, which is also set inenv/activatescript. For more information, please refer to TT-NN and TT-Metailium installation documentation.

| OS | Offline Compiler Only | Runtime Enabled Build | Runtime + Perf Enabled Build |

|---|---|---|---|

| Ubuntu 22.04 | ✅ | ✅ | ✅ |

| Ubuntu 20.04 | ✅ | ❌ | ❌ |

| MacOS | ✅ | ❌ | ❌ |

Test the Build

Use this step to check your build. Do the following:

source env/activate

cmake --build build -- check-ttmlir

Lint

Set up lint so you can spot errors and stylistic issues before runtime:

source env/activate

cmake --build build -- clang-tidy

Note for developers: You can run:

source env/activate cmake --build build -- clang-tidy-ciThis reproduces the

Lint (clang-tidy)CI job. It runsclang-tidyonly on committed files that have been modified relative to theorigin/mainbranch.

Pre-Commit

Pre-Commit applies a git hook to the local repository such that linting is checked and applied on every git commit action. Install from the root of the repository using:

source env/activate

pre-commit install

If you have already committed before installing the pre-commit hooks, you can run on all files to "catch up":

pre-commit run --all-files

For more information visit pre-commit

Docs

Build the documentation by doing the following:

-

Make sure you have

mdbook,doxygen,sphinx, andsphinx-markdown-builderinstalled. -

Build the docs:

source env/activate

cmake --build build -- docs

mdbook serve build/docs

NOTE:

mdbook servewill by default create a local server athttp://localhost:3000.

For more information about building the docs please read the full guide on building the docs.

Common Build Errors

TTMLIRPythonCAPI target requires changing an RPATH

CMake Error at /opt/ttmlir-toolchain/lib/cmake/llvm/AddLLVM.cmake:594 (add_library):

The install of the TTMLIRPythonCAPI target requires changing an RPATH from

the build tree, but this is not supported with the Ninja generator unless

on an ELF-based or XCOFF-based platform. The

CMAKE_BUILD_WITH_INSTALL_RPATH variable may be set to avoid this relinking

step.

If you get the above error, it means you tried to build with an old version of cmake or ninja and there is a stale file. To fix this, rm -rf your build directory, install a newer version of cmake/ninja, and then rebuild. If you installed ninja via sudo apt install ninja-build, it might still be not up-to-date (v1.10.0). You may use ninja in the python virtual environment, or install it via pip3 install -U ninja, either way the version 1.11.1.git.kitware.jobserver-1 should work.

clang++ is not a full path and was not found in the PATH

CMake Error at CMakeLists.txt:2 (project):

The CMAKE_CXX_COMPILER:

clang++

is not a full path and was not found in the PATH.

Tell CMake where to find the compiler by setting either the environment

variable "CXX" or the CMake cache entry CMAKE_CXX_COMPILER to the full path

to the compiler, or to the compiler name if it is in the PATH.

CMake Error at CMakeLists.txt:2 (project):

The CMAKE_C_COMPILER:

clang

is not a full path and was not found in the PATH.

Tell CMake where to find the compiler by setting either the environment

variable "CC" or the CMake cache entry CMAKE_C_COMPILER to the full path to

the compiler, or to the compiler name if it is in the PATH.

If you get the following error, it means you need to install clang which you can do with sudo apt install clang on Ubuntu.

tt-metal Update Failures

Failed to unstash changes in: '/path/to/tt-metal/src/tt-metal'

You will have to resolve the conflicts manually

This error occurs during CMake's ExternalProject update of tt-metal. The build system tries to apply changes using Git's stash mechanism, but fails due to conflicts. This can happen even if you haven't manually modified any files, as the build process itself may leave behind artifacts or partial changes from previous builds.

To resolve, run the following command:

rm -rf third_party/tt-metal

Then retry your build command. If the error persists, you may need to do the following:

-

Remove the build directory:

rm -rf build -

Run CMake commands again.

-

Run the above.

Common Runtime Errors

Debugging Python on Mac OS

When debugging python on macOS via lldb you may see an error like:

(lldb) r

error: process exited with status -1 (attach failed (Not allowed to attach to process. Look in the console messages (Console.app), near the debugserver entries, when the attach failed. The subsystem that denied t

he attach permission will likely have logged an informative message about why it was denied.))

For preinstalled macOS binaries you must manually codesign with debug entitlements.

Create file debuggee-entitlement.xml:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<key>com.apple.security.cs.disable-library-validation</key>

<true/>

<key>com.apple.security.get-task-allow</key>

<true/>

</dict>

</plist>

Sign the binary:

sudo codesign -f -s - --entitlements debuggee-entitlement.xml /opt/ttmlir-toolchain/venv/bin/python

Working with Docker Images

Components:

- Dockerfile

- Workflow for building Docker image

- Project build using Docker image

Overview

We use docker images to prepare the project environment, install dependencies, tooling and prebuild toolchain. Project builds four docker images:

- Base image

tt-mlir-base-ubuntu-22-04Dockerfile.base - CI image

tt-mlir-ci-ubuntu-22-04Dockerfile.ci - Base IRD image

tt-mlir-base-ird-ubuntu-22-04Dockerfile.ird - IRD image

tt-mlir-ird-ubuntu-22-04Dockerfile.ird

Base image starts with a supported base image (Ubuntu 22.04) and installs dependencies for project build. From there, we build the CI image that contains the prebuild toolchain and is used in CI to shorten the build time. The IRD image contains dev tools such as GDB, vim and ssh which are used in IRD environments.

During the CI Docker build, the project is built and tests are run to ensure that everything is set up correctly. If any dependencies are missing, the Docker build will fail.

Using the Docker Image

Here is a typical command to run the latest developer (ird) docker image:

sudo docker run -it -d --rm \

--name my-docker \

--cap-add ALL \

--device /dev/tenstorrent/0:/dev/tenstorrent/0 \

-v /dev/hugepages:/dev/hugepages \

-v /dev/hugepages-1G:/dev/hugepages-1G \

ghcr.io/tenstorrent/tt-mlir/tt-mlir-ird-ubuntu-22-04:latest bash

Special attention should be paid to flags:

--device /dev/tenstorrent/0:/dev/tenstorrent/0: this is required to map the hardware device into the container. For machines with multiple devices, this flag can be specified multiple times or adjusted with the appropriate device number.-v /dev/hugepages:/dev/hugepages/-v /dev/hugepages-1G:/dev/hugepages-1G: this is required to map the hugepages volume into the container. For more information on hugepages, please refer to the Getting Started Guide.The base or CI image can also be used in the same way, but the IRD image is recommended for development.

Using the Docker Image via IRD (Internal Developers Only)

Internally we use a tool called IRD. As part of your reserve command, you

can specify the docker image to use:

ird reserve \

--docker-image ghcr.io/tenstorrent/tt-mlir/tt-mlir-ird-ubuntu-22-04:latest

See ird reserve --help for more information on the reserve command. Typical

ird usage might look like:

# list machine availability

ird list-machines

# reserve a machine

ird reserve \

--volumes /localdev/$USER:/localdev/$USER \

--docker-image ghcr.io/tenstorrent/tt-mlir/tt-mlir-ird-ubuntu-22-04:latest \

--timeout 720 \

wormhole_b0 \

--machine [MACHINE_NAME]

# list your currently reserved machines

ird list

# connect to the first reserved machine

ird connect-to 1

# release the first reserved machine

ird release 1

Building the Docker Image using GitHub Actions

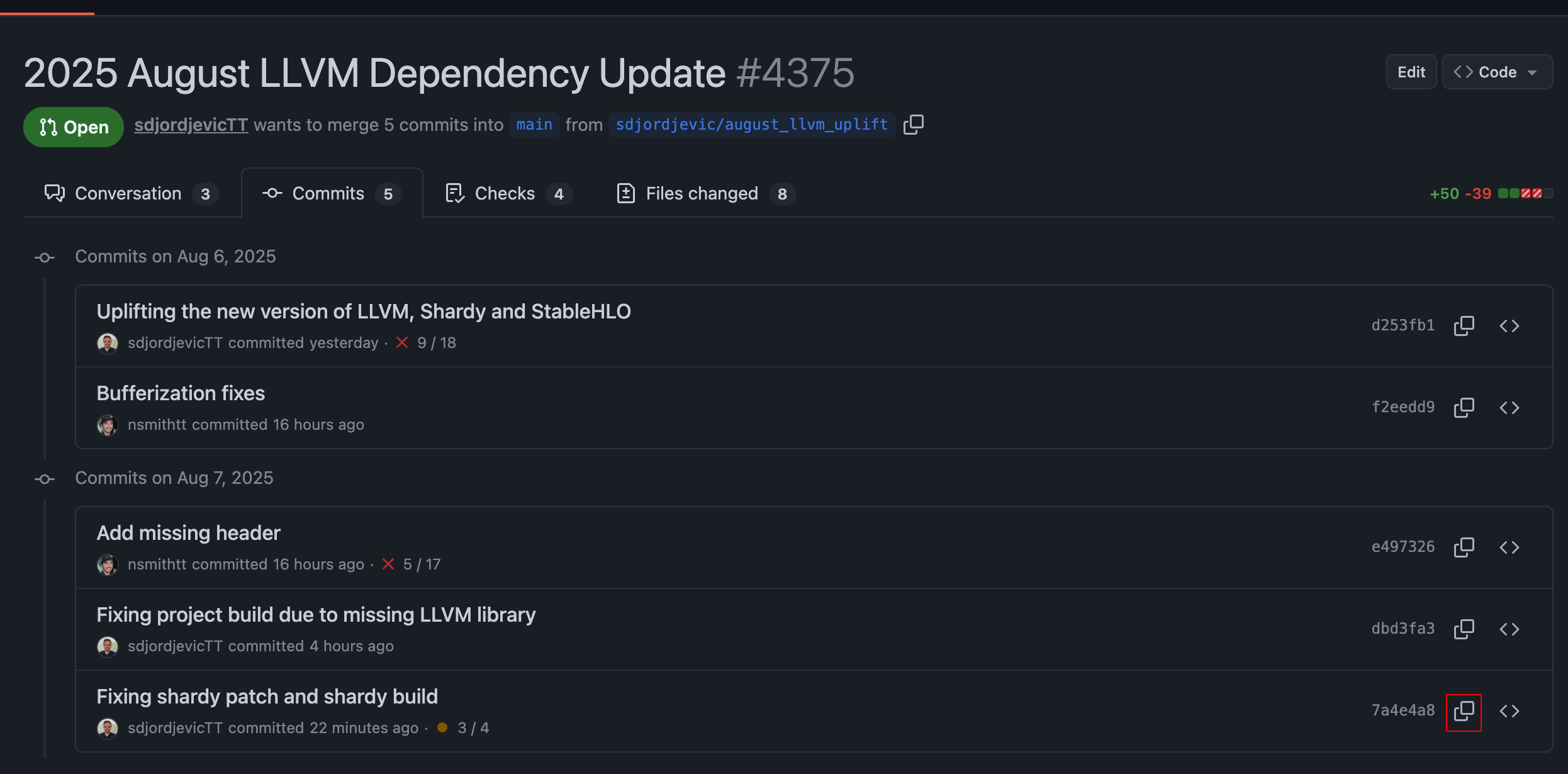

The GitHub Actions workflow Build and Publish Docker Image builds the Docker images and uploads them to GitHub Packages at https://github.com/orgs/tenstorrent/packages?repo_name=tt-mlir. We use the git SHA we build from as the tag.

Building the Docker Image Locally

To test the changes and build the image locally, use the following command:

docker build -f .github/Dockerfile.base -t ghcr.io/tenstorrent/tt-mlir/tt-mlir-base-ubuntu-22-04:latest .

docker build -f .github/Dockerfile.ci -t ghcr.io/tenstorrent/tt-mlir/tt-mlir-ci-ubuntu-22-04:latest .

docker build -f .github/Dockerfile.ird --build-arg FROM_IMAGE=base -t ghcr.io/tenstorrent/tt-mlir/tt-mlir-ird-base-ubuntu-22-04:latest .

docker build -f .github/Dockerfile.ird --build-arg FROM_IMAGE=ci -t ghcr.io/tenstorrent/tt-mlir/tt-mlir-ird-ubuntu-22-04:latest .

Using the Image in GitHub Actions Jobs

The GitHub Actions workflow Build in Docker uses a Docker container for building:

container:

image: ghcr.io/${{ github.repository }}/tt-mlir-ci-ubuntu-22-04:latest

options: --user root

Running Virtualized Ubuntu VM on macOS

In some cases, like running a software simulated device, it can be beneficial to run the stack on a local macOS machine. This document covers the necessary setup and configuration steps to get a performant Ubuntu VM setup on Apple Silicon.

Prerequisite Steps

- UTM is the VM application we'll be using in this guide, so the first step is to download and install UTM.

- Ubuntu 22.04 ARM image download.

- Direct link: 64-bit ARM (ARMv8/AArch64) server install image

UTM Setup

- Launch UTM and click the

+button to start a new VM. - Choose

Virtualize(emulation works, but is unusably slow). - Under

PreconfiguredchooseLinux. - Check box

Use Apple Virtualizationand select the ubuntu iso we just downloaded forBoot ISO Image.

- Optionally check

Enable Rosettawhich can enable running ELF's compiled for x86 if you're interested. It's not required and additional steps are required for it to work.

- This step depends on your machine's capabilities, but it's recommended to give 16GB of memory and to use the defualt CPU Cores setting. Note this can be changed after initial setup if you want to go back and tweak settings.

- It's recommended to at least 128GB of storage, with LLVM installation and full SW stack we quickly reach 80 gigs of storage.

- Optionally choose a shared host/VM directory.

- Optionally name your new VM

ubuntu 22.04 arm64

VM Setup

- Boot your newly created VM!

- Run through the Ubuntu setup as you see fit, be sure that openssh is enabled which simplifies logging into your VM, but the rest of the defaults are sufficient.

- If you plan on using your VM via ssh you can retrieve the ip address

ip aand looking at theinetrow underenp0s1. Should look something likeinet 192.168.64.3. Another tip is to add this to the host's~/.ssh/config. - Install your normal developer tools as you see fit.

Software Stack Installation

The majority of the software install flow is the same, with the exception of a few caveats called out here.

- Installing metal deps needs the additional flags below:

git clone git@github.com:tenstorrent/tt-metal.git

cd tt-metal

sudo bash install_dependencies.sh --docker --no-distributed

--docker: Despite not being in a docker, this is the flag that turns off configuring hugepages which is not required for VM.--no-distributed: Currently the metal distributed feature requires a package version of openmpi that only supports x86.

- Install tt-mlir system dependencies as outlined by this step.

- The environment needs to be built manually as outlined here.

- We can then build tt-mlir per usual.

- If planning to run tests on software sim, let's build the ttrt tool.

- The following all works per usual:

Testing

To run tests:

source env/activate

cmake --build build -- check-ttmlir

Lit testing

llvm-lit tool is used for MLIR testing. With it you can:

# Query which tests are available

llvm-lit -sv ./build/test --show-tests

# Run an individual test:

llvm-lit -sv ./build/test/ttmlir/Dialect/TTIR/test_allocate.mlir

# Run a sub-suite:

llvm-lit -sv ./build/test/ttmlir/Dialect/TTIR

See the full

llvm-litdocumentation for more information.

EmitC testing

NOTE: This is a developer's guide on how to test EmitC as a feature. For usage of EmitC, please refer to ttnn-standalone docs.

Prerequisites

-

Activated virtual environment:

source env/activate -

Saved system descriptor file:

ttrt query --save-artifacts

Table of Contents

- Generate all EmitC tests and run them

- Generate a single EmitC test and run it

- Generate EmitC tests with Builder

Generate all EmitC tests and run them

-

Generate flatbuffers and .cpp files for EmitC tests

If you don't have SYSTEM_DESC_PATH environment variable exported, you can run:

SYSTEM_DESC_PATH=/path/to/system_desc.ttsys llvm-lit -sv test/ttmlir/EmitC/TTNNOr if you have SYSTEM_DESC_PATH exported, you can omit it:

llvm-lit -sv test/ttmlir/EmitC/TTNN -

Compile generated .cpp files to shared objects

tools/ttnn-standalone/ci_compile_dylib.py -

Run flatbuffers + shared objects and compare results

ttrt run --emitc build/test/ttmlir/EmitC/TTNN

Generate EmitC tests with Builder

Builder offers support for building EmitPy modules from ttir or stablehlo ops. Refer to Builder documentation.

Generate a single EmitC test and run it

-

Generate flatbuffers and .cpp files for EmitC test

SYSTEM_DESC_PATH=/path/to/system_desc.ttsys llvm-lit -sv test/ttmlir/EmitC/TTNN/eltwise_binary/add.mlir -

Compile generated .cpp files to shared objects

Assuming default build directory path:

tools/ttnn-standalone/ci_compile_dylib.py --file build/test/ttmlir/EmitC/TTNN/eltwise_binary/add.mlir.cpp -

Run the flatbuffer + shared object and compare results

ttrt emitc build/test/ttmlir/EmitC/TTNN/eltwise_binary/add.mlir.so --flatbuffer build/test/ttmlir/EmitC/TTNN/eltwise_binary/add.mlir.ttnn

Tools

The ttmlir project currenly exposes the following tools:

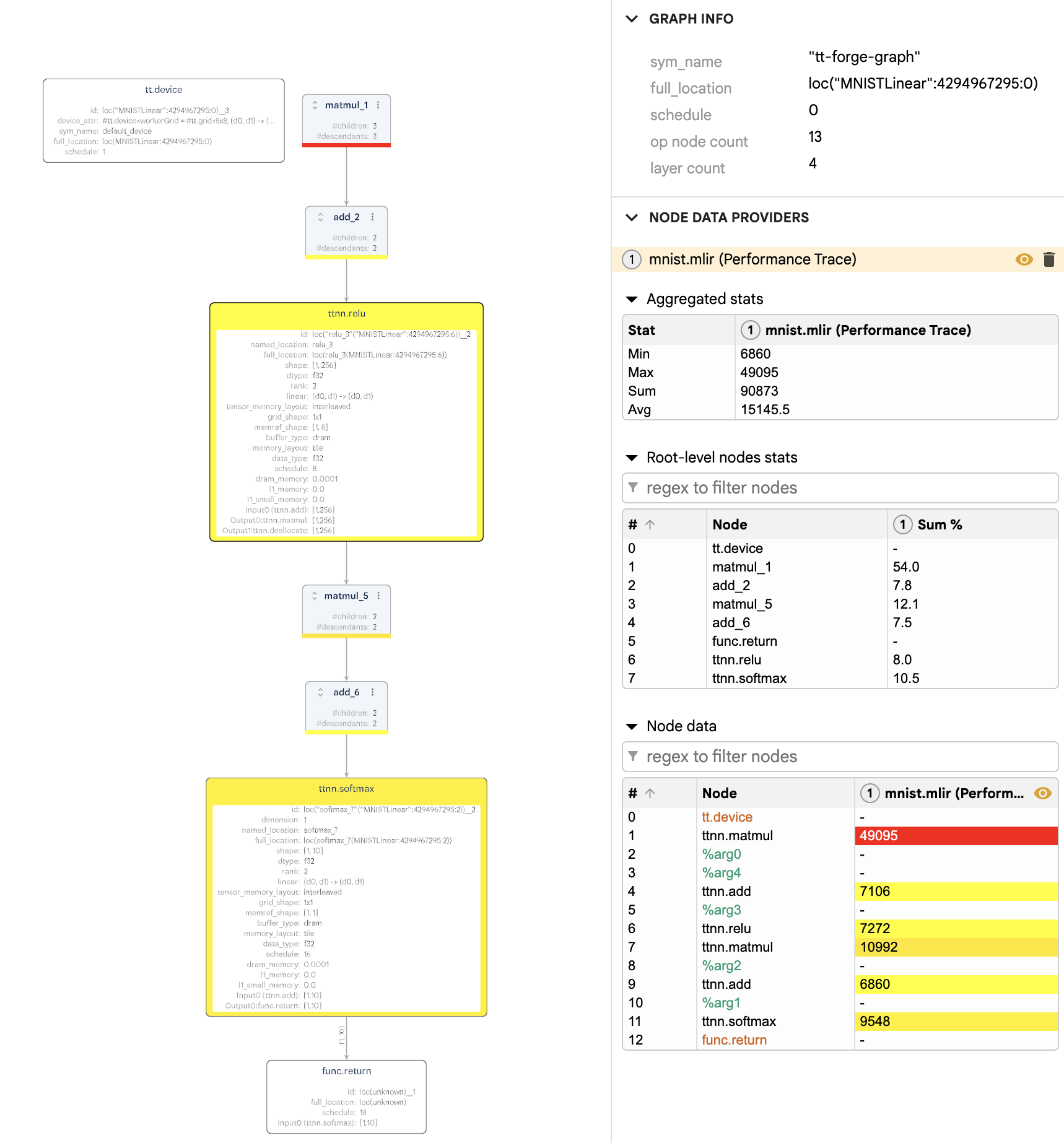

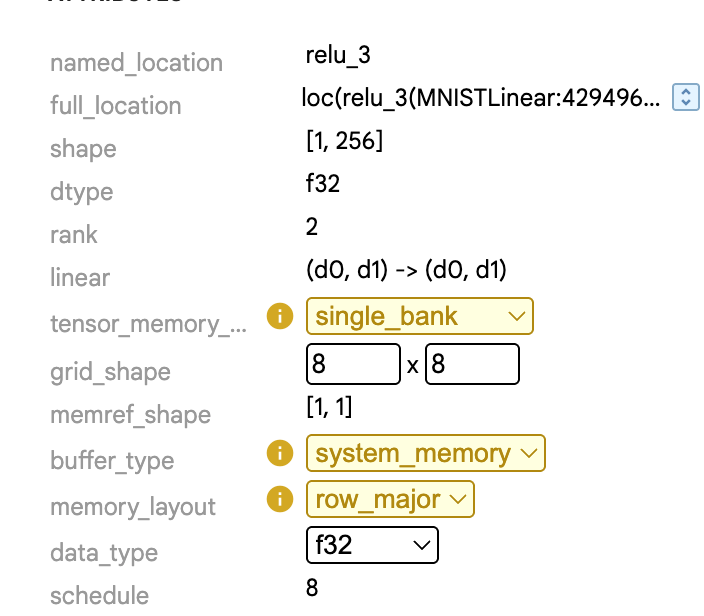

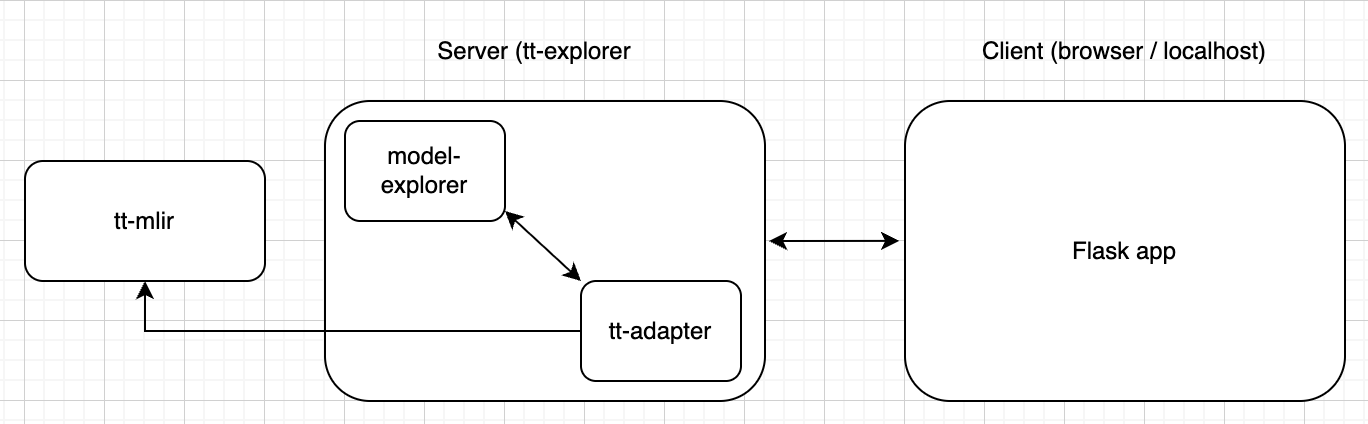

ttmlir-opt: Thettmliroptimizer driver. This tool is used to run thettmlircompiler passes on a.mlirsource files and is central to developing and testing the compiler.ttmlir-translate: Thettmlirtranslation tool. This tool can convert from IR to external representation (and inverse). For example, IR in EmitC dialect can be converted into C++ code.ttrt: This tool is intended to be a swiss army knife for working with flatbuffers generated by the compiler. Its primary role is to inspect and run flatbuffer files.ttir-builder: This tool is for creating ttir operations. It provides support for those ops to be compiled into modules or directly to flatbuffer files.tt-explorer: Visualizer tool forttmlir-powered compiler results. Visualizes from emitted.mlirfiles to display compiled model, attributes, performance results, and provide a platform for human-driven overrides to gamify model tuning.ttnn-standalone: This tool is used to run C++ TTNN code outside of the compiler environment.

ttmlir-opt

The ttmlir optimizer driver. This tool is used to run the ttmlir compiler passes on a .mlir source files and is central to developing and testing the compiler.

Simple Test

./build/bin/ttmlir-opt --ttir-to-ttnn-backend-pipeline test/ttmlir/Dialect/TTNN/simple_multiply.mlir

# Or

./build/bin/ttmlir-opt --ttir-to-ttmetal-pipeline test/ttmlir/Dialect/TTNN/simple_multiply.mlir

ttmlir-translate

The ttmlir-translate translation utility. Unlike ttmlir-opt tool which is used to run passes within the MLIR world, ttmlir-translate allows us to ingest something (e.g. code) into MLIR world, and also produce something (e.g. executable binary, or even code again) from MLIR.

Generate C++ code from MLIR

# First, let's run `ttmlir-opt` to convert to proper dialect

./build/bin/ttmlir-opt --ttir-to-emitc-pipeline test/ttmlir/Dialect/TTNN/eltwise/binary/multiply/simple_multiply.mlir -o c.mlir

# Now run `ttmlir-translate` to produce C++ code

./build/bin/ttmlir-translate --mlir-to-cpp c.mlir

Bonus: These two commands can be piped, to avoid writing a mlir file to disk, like so:

./build/bin/ttmlir-opt --ttir-to-emitc-pipeline test/ttmlir/Dialect/TTNN/eltwise/binary/multiply/simple_multiply.mlir | ./build/bin/ttmlir-translate -mlir-to-cpp

Generate flatbuffer file from MLIR

# First run `ttmlir-opt` to convert to proper dialect

./build/bin/ttmlir-opt --ttir-to-ttnn-backend-pipeline test/ttmlir/Dialect/TTNN/eltwise/binary/multiply/simple_multiply.mlir -o ttnn.mlir

# Now run `ttmlir-translate` to produce flatbuffer file

./build/bin/ttmlir-translate --ttnn-to-flatbuffer ttnn.mlir -o out.ttnn

ttrt

This tool is intended to be a swiss army knife for working with flatbuffers generated by the compiler. Its primary role is to inspect and run flatbuffer files. It enables the running of flatbuffer files without a front-end runtime.

Building

- Build ttmlir

- Build

ttrt:

source env/activate

cmake --build build

ttrt --help

Building runtime mode

Add the following flags when building the compiler

-DTTMLIR_ENABLE_RUNTIME=ON

Building perf mode

Add the following flags when building the compiler

-DTTMLIR_ENABLE_RUNTIME=ON

-DTT_RUNTIME_ENABLE_PERF_TRACE=ON

LOGGER Levels

ttrt support logging at different logger levels. You will need to set env var TTRT_LOGGER_LEVEL in command line or a python script. By default, it's set to INFO.

TTRT_LOGGER_LEVEL=INFO

TTRT_LOGGER_LEVEL=CRITICAL

TTRT_LOGGER_LEVEL=ERROR

TTRT_LOGGER_LEVEL=WARNING

TTRT_LOGGER_LEVEL=DEBUG

tt-metal logging

ttrt runtime uses tt-metal for op execution and device interfacing. For more detailed logs, which can help in troubleshooting build or runtime issues, set env var TT_METAL_LOGGER_LEVEL. By default, it is set to FATAL.

export TT_METAL_LOGGER_LEVEL=DEBUG

Installing ttrt as python whls

Every time ttrt is built, it creates a whls file in build/tools/ttrt/build. Ex filename: ttrt-0.0.235-cp312-cp312-linux_x86_64.whl. You can take this whls file and install it in any docker container and in any venv outside of ttmlir. After which, you can use all the following functionality as the same.

- Download whls

- Create a python venv

python -m venv ttrt_env

source ttrt_env/bin/activate

- Install whls (replace with your version of the whls)

pip install build/tools/ttrt/build/ttrt-0.0.235-cp312-cp312-linux_x86_64.whl

Generating a flatbuffer

tt-mlir exposes a few ways to generate flatbuffers.

Generate a flatbuffer file from ttir-builder

ttir-builder is a tool for creating TTIR ops, converting them into MLIR modules, running passes to lower modules into backends, and translating to flatbuffers. See documentation for further instructions.

Generate a flatbuffer file from compiler

The compiler supports a pass to load a system descriptor to compile against. You can feed this pass into ttmlir-opt.

- Build ttmlir

- Generate ttsys file from the system you want to compile for using

ttrt. This will create asystem_desc.ttsysfile underttrt-artifactsfolder.

ttrt query --save-artifacts

- Use

ttmlir-opttool in compiler to feed system descriptor. See thettmlir-optdocumentation for more information on how to generate .mlir files.

./build/bin/ttmlir-opt --ttcore-register-device="system-desc-path=/path/to/system_desc.ttsys" --ttir-to-ttnn-backend-pipeline test/ttmlir/Dialect/TTNN/simple_subtract.mlir -o ttnn.mlir

or (pipe path directly into ttir-to-ttnn-backend-pipeline)

./build/bin/ttmlir-opt --ttir-to-ttnn-backend-pipeline="system-desc-path=/path/to/system_desc.ttsys" test/ttmlir/Dialect/TTNN/simple_subtract_to_add.mlir -o ttnn.mlir

- Use

ttmlir-translatetool in compiler to generate the flatbuffer executable. See thettmlir-translatedocumentation for more information on how to generate flatbuffer files.

./build/bin/ttmlir-translate --ttnn-to-flatbuffer ttnn.mlir -o out.ttnn

- Run your test cases using

ttrt

ttrt run /path/to/out.ttnn

Generate flatbuffer files using llvm-lit

There are already existing .mlir test cases under test/ttmlir/Silicon. You can use llvm-lit tool to generate the corresponding ttnn and ttm files.

- Build ttmlir

- Generate ttsys file from the system you want to compile for using

ttrt. This will create asystem_desc.ttsysfile underttrt-artifactsfolder.

ttrt query --save-artifacts

- Export this file in your environment using

export SYSTEM_DESC_PATH=/path/to/system_desc.ttsys. Whenllvm-litis run, it will query this variable and generate the ttnn and ttm files using this system. Optionally, you can also provide this manually when runningllvm-lit. - Generate your test cases. This will generate all your ttnn and ttm files under

build/test/ttmlir/Silicon. ttnn files have a.ttnnfile extension and ttmetal files have a.ttmextension.

cmake --build build -- check-ttmlir

- (Optional) If you have a single .mlir file (or a directory of custom .mlir files) that you created using the compiler, and you want to generate the corresponding ttnn and ttm files for it, you can run

llvm-litstandalone to the path of your .mlir file or directory of .mlir files to generate the flatbuffer executables. You will have to make sure you add in the correctllvm-litconfigs into your .mlir file. See section on addingllvm-litconfig options inside a .mlir file to create flatbuffer binaries for more info. You must also make sure your .mlir test is found within test/ttmlir/Silicon folder (and point lit to the build folder)!

llvm-lit -v ./build/test/ttmlir/Silicon

or

SYSTEM_DESC_PATH=/path/to/system_desc.ttsys llvm-lit -v ./build/test/ttmlir/Silicon

- Run your test cases using

ttrt

ttrt run /path/to/test.ttnn

ttrt run /path/to/dir/of/flatbuffers

Adding llvm-lit config options inside a .mlir file to create flatbuffer binaries

Inside of your .mlir file, you can add certain config options that llvm-lit will use when running against that test case. For the purpose of generating flatbuffer executables, you can add --ttcore-register-device="system-desc-path=%system_desc_path%" which will tell llvm-lit to parse the system desc found from the environment flag set by export SYSTEM_DESC_PATH=/path/to/system_desc.ttsys. You can also paste a custom path to a system desc file as well.

// RUN: ttmlir-opt --ttcore-register-device="system-desc-path=%system_desc_path%" --ttnn-layout --convert-ttir-to-ttnn %s > %t.mlir

// RUN: FileCheck %s --input-file=%t.mlir

// RUN: ttmlir-translate --ttnn-to-flatbuffer %t.mlir > %t.ttnn

Adding new mlir test cases

You can copy your .mlir test file (with the appropriate llvm-lit config options for generating flatbuffer binaries) into test/ttmlir/Silicon. Then, follow generating flatbuffer files using llvm-lit to generate the executables to run!

Versioning

ttrt and flatbuffers have strict versioning check. When running a flatbuffer against ttrt, you have to make sure the flatbuffer was generated using the same version as ttrt (or vice versa). Major and Minor versions are manually set using github tags when releases are made. Patch versioning is the number of commits from the last major/minor tag.

vmajor.minor.patch

The flag --ignore-version can be used to bypass versioning checks. Use at your own risk; it can cause unpredictable errors.

Application APIs

ttrt --help

ttrt read

ttrt run

ttrt query

ttrt perf

ttrt check

ttrt emitpy

Command line usage

There are different ways you can use the APIs under ttrt. The first is via the command line as follows. All artifacts are saved under ttrt-artifacts folder under TT_MLIR_HOME environment variable. By default, all logging is printed to the terminal. You can specify a log file to dump output to.

read

Read sections of a binary file

ttrt read --help

ttrt read --section version out.ttnn

ttrt read --section system_desc out.ttnn

ttrt read --section mlir out.ttnn

ttrt read --section inputs out.ttnn

ttrt read --section outputs out.ttnn

ttrt read --section op_stats out.ttnn

ttrt read --section mesh_shape out.ttnn

ttrt read --section all out.ttnn --clean-artifacts

ttrt read --section all out.ttnn --save-artifacts

ttrt read --section all /dir/of/flatbuffers

ttrt read system_desc.ttsys

ttrt read --section system_desc system_desc.ttsys

ttrt read system_desc.ttsys --log-file ttrt.log

ttrt read out.ttnn --save-artifacts --artifact-dir /path/to/some/dir

ttrt read out.ttnn --result-file result.json

run

Run a binary file or a directory of binary files

Note: It's required to be on a system with silicon and to have a runtime enabled build -DTTMLIR_ENABLE_RUNTIME=ON.

ttrt run --help

ttrt run out.ttnn

ttrt run out.ttnn --seed 0

ttrt run out.ttnn --init arange

ttrt run out.ttnn --identity

ttrt run out.ttnn --identity --rtol 1 --atol 1

ttrt run out.ttnn --clean-artifacts

ttrt run out.ttnn --save-artifacts

ttrt run out.ttnn --loops 10

ttrt run --program-index all out.ttnn

ttrt run --program-index 0 out.ttnn

ttrt run /dir/of/flatbuffers

ttrt run /dir/of/flatbuffers --loops 10

ttrt run /dir/of/flatbuffers --log-file ttrt.log

ttrt run out.ttnn --save-artifacts --artifact-dir /path/to/some/dir

ttrt run out.ttnn --dump-kernels --kernel-source-dir /tmp

ttrt run out.ttnn --load-kernels --kernel-source-dir /tmp

ttrt run out.ttnn --result-file result.json

ttrt run out.ttnn --disable-golden

ttrt run out.ttnn --save-golden-tensors

ttrt run out.ttnn --print-input-output-tensors

ttrt run out.ttnn --debugger

ttrt run out.ttnn --memory --save-artifacts

ttrt run out.ttnn --memory --check-memory-leak

For info on running EmitC tests, see EmitC testing.

Run results

The run api saves a run_results.json file that records information about the run including any errors that were thrown and location of other saved run data.

{

[

{

"file_path": "ttnn/test_tan[f32-shape0]_ttnn.mlir.ttnn",

"result": "pass",

"exception": "",

"log_file": "ttrt.log",

"artifacts": "/home/$USER/tt-mlir/ttrt-artifacts",

"program_index": "all",

"program_results": {

"program_index_0": {

"loop_0": {

"total_duration_ns": 3269341588,

"total_ttnn_api_duration_ns": null,

"total_device_kernel_duration_ns": null

}

}

}

}

]

Golden checks

Golden checks are used to verify runtime op accuracy. They are run by default during the golden callback unless flag --disable-golden is used. If flag --save-artifacts is used, a golden results report will be saved under the artifacts directory.

{

"loc(\"/home/$USER/tt-mlir/test/python/golden/test_ttir_ops.py:74:id(0)\")": {

"expected_pcc": 0.99,

"actual_pcc": 0.0015917614829425491,

"atol": 1e-08,

"rtol": 1e-05,

"allclose": false,

"max": 8529.765625,

"mean_absolute_error": 6.644593238830566,

"root_mean_square_error": 100.30211639404297,

"cosine_similarity": 0.0016297339461743832

}

}

Memory

Memory callback functions are run when flag --memory is used. A memory report will be written under the artifacts directory that contains information on op memory usage.

{

"0": {

"loc": "loc(\"/home/$USER/tt-mlir/test/python/golden/test_ttir_ops.py:74:id(0)\")",

"debug_str": "%0 = \"ttnn.tan\"(%arg0) : (tensor<128x128xf32, #ttnn.ttnn_layout<(d0, d1) -> (d0, d1), <1x1>, memref<4x4x!ttcore.tile<32x32, f32>, #ttnn.buffer_type<dram>>, <interleaved>>>) -> tensor<128x128xf32, #ttnn.ttnn_layout<(d0, d1) -> (d0, d1), <1x1>, memref<4x4x!ttcore.tile<32x32, f32>, #ttnn.buffer_type<dram>>, <interleaved>>> loc(\"/home/$USER/tt-mlir/test/python/golden/test_ttir_ops.py:74:id(0)\")",

"dram": {

"num_banks": 12,

"total_bytes_per_bank": 1071181792,

"total_bytes_allocated_per_bank": 16384,

"total_bytes_free_per_bank": 1071167456,

"largest_contiguous_bytes_free_per_bank": 1071165408,

"block_table": [

{

"allocated": "yes",

"nextID": "1",

"prevID": "-1",

"size": "8192",

"address": "0",

"blockID": "0"

},

{

"allocated": "yes",

"nextID": "3",

"prevID": "0",

"size": "8192",

"address": "8192",

"blockID": "1"

},

{

"allocated": "no",

"nextID": "-1",

"prevID": "1",

"size": "1071165408",

"address": "16384",

"blockID": "3"

}

]

},

"l1": {

"num_banks": 64,

"total_bytes_per_bank": 1369120,

"total_bytes_allocated_per_bank": 0,

"total_bytes_free_per_bank": 1369120,

"largest_contiguous_bytes_free_per_bank": 1369120,

"block_table": [

{

"allocated": "no",

"nextID": "-1",

"prevID": "-1",

"size": "1369120",

"address": "0",

"blockID": "0"

}

]

},

"l1_small": {

"num_banks": 64,

"total_bytes_per_bank": 32768,

"total_bytes_allocated_per_bank": 0,

"total_bytes_free_per_bank": 32768,

"largest_contiguous_bytes_free_per_bank": 32768,

"block_table": [

{

"allocated": "no",

"nextID": "-1",

"prevID": "-1",

"size": "32768",

"address": "0",

"blockID": "0"

}

]

},

"trace": {

"num_banks": 12,

"total_bytes_per_bank": 0,

"total_bytes_allocated_per_bank": 0,

"total_bytes_free_per_bank": 0,

"largest_contiguous_bytes_free_per_bank": 0,

"block_table": [

{

"allocated": "no",

"nextID": "-1",

"prevID": "-1",

"size": "0",

"address": "0",

"blockID": "0"

}

]

}

}

}

Debugger

Enabling the --debugger flag sets a pbd trace to run after each op during the callback hook.

query

Query the system to obtain the system desc file (optionally store it to disk)

Note: It's required to be on a system with silicon and to have a runtime enabled build -DTTMLIR_ENABLE_RUNTIME=ON.

ttrt query --help

ttrt query

ttrt query --quiet

ttrt query --save-artifacts

ttrt query --clean-artifacts

ttrt query --save-artifacts --log-file ttrt.log

ttrt query --save-artifacts --artifact-dir /path/to/some/dir

ttrt query --result-file result.json

perf

Run performance mode of a binary file or a directory of binary files

Note: It's required to be on a system with silicon and to have a runtime enabled build -DTTMLIR_ENABLE_RUNTIME=ON. Also need perf enabled build -DTT_RUNTIME_ENABLE_PERF_TRACE=ON.

Note: You can collect host only related performance data via --host-only flag. By default, host and device side performance data are both collected.

If the saving artifacts flag is provided, perf mode will dump the following files in the artifacts directory

ops_perf_results.csv : compiled op performance results

OP CODE,OP TYPE,GLOBAL CALL COUNT,DEVICE ID,ATTRIBUTES,MATH FIDELITY,CORE COUNT,PARALLELIZATION STRATEGY,HOST START TS,HOST END TS,HOST DURATION [ns],DEVICE FW START CYCLE,DEVICE FW END CYCLE,OP TO OP LATENCY [ns],OP TO OP LATENCY BR/NRISC START [ns],DEVICE FW DURATION [ns],DEVICE KERNEL DURATION [ns],DEVICE KERNEL DURATION DM START [ns],DEVICE KERNEL DURATION PER CORE MIN [ns],DEVICE KERNEL DURATION PER CORE MAX [ns],DEVICE KERNEL DURATION PER CORE AVG [ns],DEVICE KERNEL FIRST TO LAST START [ns],DEVICE BRISC KERNEL DURATION [ns],DEVICE NCRISC KERNEL DURATION [ns],DEVICE TRISC0 KERNEL DURATION [ns],DEVICE TRISC1 KERNEL DURATION [ns],DEVICE TRISC2 KERNEL DURATION [ns],DEVICE ERISC KERNEL DURATION [ns],DEVICE COMPUTE CB WAIT FRONT [ns],DEVICE COMPUTE CB RESERVE BACK [ns],DISPATCH TOTAL CQ CMD OP TIME [ns],DISPATCH GO SEND WAIT TIME [ns],INPUT_0_W,INPUT_0_Z,INPUT_0_Y,INPUT_0_X,INPUT_0_LAYOUT,INPUT_0_DATATYPE,INPUT_0_MEMORY,OUTPUT_0_W,OUTPUT_0_Z,OUTPUT_0_Y,OUTPUT_0_X,OUTPUT_0_LAYOUT,OUTPUT_0_DATATYPE,OUTPUT_0_MEMORY,METAL TRACE ID,METAL TRACE REPLAY SESSION ID,COMPUTE KERNEL SOURCE,COMPUTE KERNEL HASH,DATA MOVEMENT KERNEL SOURCE,DATA MOVEMENT KERNEL HASH,BRISC MAX KERNEL SIZE [B],NCRISC MAX KERNEL SIZE [B],TRISC 0 MAX KERNEL SIZE [B],TRISC 1 MAX KERNEL SIZE [B],TRISC 2 MAX KERNEL SIZE [B],ERISC MAX KERNEL SIZE [B],PM IDEAL [ns],PM COMPUTE [ns],PM BANDWIDTH [ns],PM REQ I BW,PM REQ O BW,PM FPU UTIL (%),NOC UTIL (%),DRAM BW UTIL (%),NPE CONG IMPACT (%),LOC,CONST_EVAL_OP,PROGRAM_METADATA

UnaryDeviceOperation,tt_dnn_device,1024,0,{'bfp8_pack_precise': 'false'; 'fp32_dest_acc_en': 'true'; 'op_chain': '{UnaryWithParam(op_type=UnaryOpType::TAN;param={})}'; 'output_dtype': 'DataType::FLOAT32'; 'output_memory_config': 'MemoryConfig(memory_layout=TensorMemoryLayout::INTERLEAVED;buffer_type=BufferType::DRAM;shard_spec=std::nullopt;nd_shard_spec=std::nullopt;created_with_nd_shard_spec=0)'; 'preserve_fp32_precision': 'true'},HiFi4,16,,4556959654,4557518500,558846,9815181939513,9815181946491,0,0,6978,6314,6126,4982,6216,5652,335,6087,1375,1656,4957,465,,,,,,1,1,128,128,TILE,FLOAT32,DEV_1_DRAM_INTERLEAVED,1,1,128,128,TILE,FLOAT32,DEV_1_DRAM_INTERLEAVED,,,['ttnn/cpp/ttnn/operations/eltwise/unary/device/kernels/compute//eltwise_sfpu.cpp'],['eltwise_sfpu/3265258334475852953/'],['ttnn/cpp/ttnn/operations/eltwise/unary/device/kernels/dataflow/reader_unary_interleaved_start_id.cpp'; 'ttnn/cpp/ttnn/operations/eltwise/unary/device/kernels/dataflow/writer_unary_interleaved_start_id.cpp'],['reader_unary_interleaved_start_id/1146610629329498539/'; 'writer_unary_interleaved_start_id/1727642094059197364/'],708,736,1344,1568,1380,0,1,1,1,[],[],0.016,,,,"loc(""/home/$USER/tt-mlir/test/python/golden/test_ttir_ops.py:74:id(0)"")",false,"{'loop_number': 0, 'program_index': 0, 'disable_eth_dispatch': False, 'enable_program_cache': False, 'dump_device_rate': 1000}"

profile_log_device.csv : dump of all device side profiled results

tracy_ops_data.csv : op data results dumped in a readable format

tracy_ops_times.csv : op time results dumped in a readable format

tracy_profile_log_host.tracy : tracy profiled results file, this file can be fed into the tracy GUI

check

Check a binary file or a directory of binary files against a system desc (by default, uses the host machine)

Note: It's required to be on a system with silicon and to have a runtime enabled build -DTTMLIR_ENABLE_RUNTIME=ON.

ttrt check --help

ttrt check out.ttnn

ttrt check out.ttnn --system-desc /path/to/system_desc.ttsys

ttrt check out.ttnn --clean-artifacts

ttrt check out.ttnn --save-artifacts

ttrt check out.ttnn --log-file ttrt.log

ttrt check /dir/of/flatbuffers --system-desc /dir/of/system_desc

ttrt check --save-artifacts --artifact-dir /path/to/some/dir out.ttnn

ttrt check out.ttnn --result-file result.json

emitpy

Run a python file or a directory of python files. Optionally provide a binary file or directory of binary files for output tensor comparison.

Note: It's required to be on a system with silicon and to have a runtime enabled build -DTTMLIR_ENABLE_RUNTIME=ON.

ttrt emitpy --help

ttrt emitpy out.py

ttrt emitpy out.py --clean-artifacts

ttrt emitpy out.py --save-artifacts

ttrt emitpy out.py --loops 10

ttrt emitpy --program-index all out.py

ttrt emitpy --program-index 0 out.py

ttrt emitpy /dir/of/emitpy_modules

ttrt emitpy /dir/of/emitpy_modules --loops 10

ttrt emitpy /dir/of/emitpy_modules --log-file ttrt.log

ttrt emitpy /dir/of/emitpy_modules --flatbuffer /path/to/flatbuffer

ttrt emitpy out.py --save-artifacts --artifact-dir /path/to/some/dir

ttrt emitpy out.py --result-file result.json

ttrt emitpy out.py --print-input-output-tensors

ttrt emitpy out.py --memory --save-artifacts

For info on generating EmitPy tests through ttmlir-opt and ttmlir-translate, see EmitPy.

For info on generating EmitPy tests through ttir-builder, see ttir-builder.

emitpy results

The emitpy api saves a emitpy_results.json file that records information about the run including any errors that were thrown and location of other saved data.

[

{

"file_path": "ttir-builder-artifacts/emitpy/test_binary_ops[add-emitpy-f32-128x128]_ttnn.mlir.py",

"result": "pass",

"exception": "",

"log_file": "ttrt.log",

"artifacts": "/home/$USER/tt-mlir/ttrt-artifacts",

"program_index": "all"

}

]

emitc

Run a .so file or a directory of .so files. Optionally provide a binary file or directory of binary files for output tensor comparison.

Note: It's required to be on a system with silicon and to have a runtime enabled build -DTTMLIR_ENABLE_RUNTIME=ON.

ttrt emitc --help

ttrt emitc out.py

ttrt emitc out.py --clean-artifacts

ttrt emitc out.py --save-artifacts

ttrt emitc out.py --loops 10

ttrt emitc --program-index all out.py

ttrt emitc --program-index 0 out.py

ttrt emitc /dir/of/emitc_modules

ttrt emitc /dir/of/emitc_modules --loops 10

ttrt emitc /dir/of/emitc_modules --log-file ttrt.log

ttrt emitc /dir/of/emitc_modules --flatbuffer /path/to/flatbuffer

ttrt emitc out.py --save-artifacts --artifact-dir /path/to/some/dir

ttrt emitc out.py --result-file result.json

ttrt emitc out.py --print-input-output-tensors

ttrt emitc out.py --memory --save-artifacts

For info on generating EmitC tests through ttnn-standalone, see EmitC testing documentation.

For info on generating EmitC tests through ttir-builder, see ttir-builder documentation.

emitc results

The emitc api saves a emitc_results.json file that records information about the run including any errors that were thrown and location of other saved data.

[

{

"file_path": "ttir-builder-artifacts/emitc/test_reciprocal[emitc-f32-128x128]_ttnn.mlir.so",

"result": "pass",

"exception": "",

"log_file": "ttrt.log",

"artifacts": "/home/$USER/tt-mlir/ttrt-artifacts",

"program_index": "all"

}

]

gdb

You can relaunch ttrt inside of gdb which can be useful for debugging C++

runtime components.

ttrt --gdb run ...

ttrt --gdb perf ...

Using as a python package

The other way to use the APIs under ttrt is importing it as a library. This allows the user to use it in custom scripts.

Import ttrt as a python package

from ttrt.common.api import API

Setup API and register all features

API.initialize_apis()

Setup arguments

You can specify certain arguments to pass to each API, or use the default arguments provided

Args

This can be a dictionary of values to set inside your API instance. These are the same options as found via the command line. You can get the total list of support arguments via the ttrt --help command. Any argument not provided will be set to the default.

custom_args = {}

custom_args["--clean-artifacts"] = True

query_instance = API.Query(args=custom_args)

Logging

You can specify a specific logging module you want to set inside your API instance. The rationale behind this is to support different instances of different APIs, all being able to be logged to a different file. You can also customize the level of detail your log file contains.

from ttrt.common.util import Logger

import os

os.environ["LOGGER_LEVEL"] = "DEBUG"

log_file_name = "some_file_name.log"

custom_logger = Logger(log_file_name)

read_instance = API.Read(logger=custom_logger)

Artifacts

You can specify a specific artifacts directory to store all the generate metadata during the execution of any API run. This allows you to specify different artifact directories if you wish for different instances of APIs.

from ttrt.common.util import Artifacts

log_file_name = "some_file_name.log"

artifacts_folder_path = "/opt/folder"

custom_logger = Logger(log_file_name)

custom_artifacts = Artifacts(logger=custom_logger, artifacts_folder_path=artifacts_folder_path)

run_instance = API.Run(artifacts=custom_artifacts)

Execute API

Once all the arguments are setup, you can run your API instance with all your provided arguments. Note, APIs are stateless. Thus, subsequent calls to the same API instance will not preserve previous call artifacts. You can generate a new artifacts directory for subsequent runs if you wish to call the APIs multiple times, for example.

result_code, results = query_instance()

result_code, results = read_instance()

result_code, results = run_instance()

Putting it all together

You can do interesting stuff when combining all the above features into your python script

from ttrt.common.api import API

from ttrt.common.util import Logger

from ttrt.common.util import Artifacts

API.initialize_apis()

custom_args = {}

custom_args["--clean-artifacts"] = True

custom_args["--save-artifacts"] = True

custom_args["--loops"] = 10

custom_args["--init"] = "randn"

custom_args["binary"] = "/path/to/subtract.ttnn"

log_file_name = "some_file_name.log"

custom_logger = Logger(log_file_name)

artifacts_folder_path = "/opt/folder"

custom_artifacts = Artifacts(logger=custom_logger, artifacts_folder_path=artifacts_folder_path)

run_instance = API.Run(args=custom_args, logger=custom_logger, artifacts=custom_artifacts)

result_code, results = run_instance()

Runtime integration

The full set of ttrt.runtime exposed APIs and types can be found in runtime/python/runtime/runtime.cpp, however only the ones intended to be used for runtime customization through callback hooks are outlined here.

Callback hooks

MLIR Runtime exposes a feature to register python callback functions. Any two python fuctions can be provided - the first function will be executed before every op in MLIR Runtime, the second after every op. The following steps describe how to extend your application to register python functions. Callback functions are already implemented by default for pbd debugger implementation and gathering memory and golden check data as outlined in the run API section.

- Pybind DebugHooks C++ class, specifically

tt::runtime::debug::Hooks::get. Seeruntime/python/runtime/runtime.cppfor an example of howttrtpybinds it.

tt::runtime::debug::Hooks

tt::runtime::debug::Hooks::get

- Register callback functions in your python script. The following is registering the two callback functions written in

tools/ttrt/common/callback.py. The Debug Hooks get function has been pybinded tottrt.runtime.DebugHooks.get

import ttrt.runtime

callback_env = ttrt.runtime.DebugHooks.get(pre_op_callback_runtime_config, post_op_callback_runtime_config)

- The callback function has a particular function signature, which looks like the following

def pre_op_callback_runtime_config(binary, program_context, op_context):

binary: reference to the binary you are currently running, ttrt.binary Binary object

program_context: reference to the program currently running, ttrt.runtime ProgramContext object

op_context: reference to the op that is currently running, ttrt.runtime OpContext object

- Each of these parameters has certain runtime APIs exposed which can only be called within the callback functions since they rely on the

op_contextvariable that is only available from runtime during callbacks.

import ttrt.runtime

loc = ttrt.runtime.get_op_loc_info(op_context) : get the location of the op as a string which is used as the key when indexing the golden tensors stored in the flatbuffer

op_debug_str = ttrt.runtime.get_op_debug_str(op_context) : get the op debug str (contains op metadata inculding op type, attributes, input tensor shapes and dtypes, memref with layout and buffer type, and loc)

op_golden_tensor = ttrt.runtime.get_debug_info_golden(binary, loc) : get the golden tensor from the binary as a ttrt.binary GoldenTensor object

op_output_tensor = ttrt.runtime.get_op_output_tensor(op_context, program_context) : get the currently running output tensor from device as a ttrt.runtime Tensor object, if this is called in a preOp function or the op doesn't output a tensor, an empty tensor will be returned.

Note: ttrt is not needed to implement this callback feature. It aims to provide an example of how this callback feature can be implemented for golden application.

FAQ

Flatbuffer version does not match ttrt version!

ttrt and flatbuffer have strict versioning that is checked during ttrt execution. You will have to generate a flatbuffer using the same version of ttrt (or vice versa). This mean you might have to build on the same branch on which the flatbuffer was generated or regenerate the flatbuffer using your current build.

System desc does not match flatbuffer!

Flatbuffers are compiled using a specific system desc (or default values if no system desc is provided). During runtime, the flatbuffer system desc is checked against the current system to ensure the system being run on supports the flatbuffer that was compiled. If you get this error, you will have to regenerate the flatbuffer using the system you want to run on. See generate a flatbuffer file from compiler section on how to do this.

I just want to test and push my commit! What do I do!

Follow these steps (on n150, n300, and llmbox)

- Build ttmlir (sample instructions - subject to change)

source env/activate

cmake -G Ninja -B build -DCMAKE_BUILD_TYPE=Release -DCMAKE_C_COMPILER=clang-17 -DCMAKE_CXX_COMPILER=clang++-17 -DCMAKE_CXX_COMPILER_LAUNCHER=ccache -DTTMLIR_ENABLE_RUNTIME=ON -DTT_RUNTIME_ENABLE_PERF_TRACE=ON

cmake --build build

- Query system

ttrt query --save-artifacts

- Export system desc file

export SYSTEM_DESC_PATH=/path/to/system_desc.ttsys (path dumped in previous command)

- Generate test cases

cmake --build build -- check-ttmlir

- Run test cases

ttrt run build/test/ttmlir/Silicon

- (Optional) Run perf test cases

ttrt perf build/test/ttmlir/Silicon

TTRT yields an ambiguous segmentation fault!

The ttrt toolchain has specific behaviors and requirements that can lead to build and runtime issues, particularly when dealing with version mismatches or out-of-sync dependencies.

Version Mismatch Due to Local Commits

The ttrt toolchain verifies whether the current system configuration matches the model’s compilation environment. This verification involves tracking the number of commits since the last synchronization. When local commits are made in your branch, it may trigger a version mismatch between the compiled model and the current environment. This mismatch may not be handled properly by the runtime (rt), leading to potential issues.

To resolve issues stemming from these synchronization problems, follow this workflow:

- Incremental build

# make some changes

# commit

cmake --build build

# note you need to generate system_desc and flatbuffer again once you do this

This incremental build should be sufficient. If it does not resolve the error, please file an issue and proceed with the following steps for now.

- Clear the existing build and dependencies:

rm -rf build third_party/tt-metal

This ensures that all previous build artifacts and dependencies are removed, preventing conflicts or stale files from affecting the new build.

-

Rebuild from scratch: After clearing the build directories, rebuild the project from the ground up. This ensures that the build process incorporates all the necessary components without any remnants of previous builds. Build Instructions

-

Switch build configurations: If switching from a Debug to a Release build (or vice versa), ensure that you clean the build environment before transitioning. This avoids inconsistencies between build configurations and potential issues with optimization levels or debugging symbols.

-

Re-acquire the IRD: By relinquishing and re-acquiring the IRD, you ensure that the correct toolchain is used for the new build. This step ensures synchronization between the model and the toolchain.

-

Enable Debug Logging for tt-metal: To gain more insight into potential issues, enable debugging by setting the TT_METAL_LOGGER_LEVEL to DEBUG. This will provide detailed logs, which can help in troubleshooting build or runtime issues.

export TT_METAL_LOGGER_LEVEL=DEBUG

ttir-builder

ttir-builder is a tool for creating TTIR operations. It provides support for MLIR modules to be generated from user-constructed ops, lowered into TTNN or TTMetal backends, and finally translated into executable flatbuffers. Or you can do all three at once!

Building

- Build tt-mlir

- Build

ttrt - Generate ttsys file from the system you want to compile for using

ttrt. This will create attrt-artifactsfolder containing asystem_desc.ttsysfile.

ttrt query --save-artifacts

- Export this file in your environment using

export SYSTEM_DESC_PATH=/path/to/system_desc.ttsys.builder.base.builder_utilsuses thesystem_desc.ttsysfile as it runs a pass over an MLIR module to the TTNN or TTMetal backend.

Getting started

TTIRBuilder is a builder class providing the API for creating TTIR ops. The python package builder contains everything needed to create ops through a TTIRBuilder object. builder.base.builder_utils contains the APIs for wrapping op-creating-functions into MLIR modules and flatbuffers files.

from builder.ttir.ttir_builder import TTIRBuilder

from builder.base.builder_utils import compile_ttir_to_flatbuffer

Creating a TTIR module

build_ttir_module defines an MLIR module specified as a python function. It wraps fn in a MLIR FuncOp then wraps that in an MLIR module, and finally ties arguments of that FuncOp to test function inputs. It will instantiate and pass a TTIRBuilder object as the last argument of fn. Each op returns an OpView type which is a type of Operand that can be passed into another builder op as an input.

def build_ttir_module(

fn: Callable,

inputs_shapes: List[Shape],

inputs_types: Optional[List[Union[torch.dtype, TypeInfo]]] = None,

mesh_name: str = "mesh",

mesh_dict: OrderedDict[str, int] = OrderedDict([("x", 1), ("y", 1)]),

module_dump: bool = False,

base: Optional[str] = None,

output_root: str = ".",

) -> Tuple[Module, TTIRBuilder]:

Example

from builder.base.builder import Operand

from builder.ttir.ttir_builder import TTIRBuilder

from builder.base.builder_utils import build_ttir_module

shapes = [(32, 32), (32, 32), (32, 32)]

def model(in0: Operand, in1: Operand, in2: Operand, builder: TTIRBuilder):

add_0 = builder.add(in0, in1)

multiply_1 = builder.multiply(in1, add_0)

return builder.multiply(multiply_1, in2)

module, builder = build_ttir_module(model, shapes)

Returns

An MLIR module containing an MLIR op graph defined by fn and the TTIRBuilder object used to create it

module {

func.func @model(%arg0: tensor<32x32xf32>, %arg1: tensor<32x32xf32>, %arg2: tensor<32x32xf32>) -> tensor<32x32xf32> {

%0 = ttir.empty() : tensor<32x32xf32>

%1 = "ttir.add"(%arg0, %arg1, %0) : (tensor<32x32xf32>, tensor<32x32xf32>, tensor<32x32xf32>) -> tensor<32x32xf32>

%2 = ttir.empty() : tensor<32x32xf32>

%3 = "ttir.multiply"(%arg1, %1, %2) : (tensor<32x32xf32>, tensor<32x32xf32>, tensor<32x32xf32>) -> tensor<32x32xf32>

%4 = ttir.empty() : tensor<32x32xf32>

%5 = "ttir.multiply"(%3, %arg2, %4) : (tensor<32x32xf32>, tensor<32x32xf32>, tensor<32x32xf32>) -> tensor<32x32xf32>

return %5 : tensor<32x32xf32>

}

}

Running a pipeline

run_ttir_pipeline runs a pass on the TTIR module to lower it into a backend, using pipeline_fn. You can pass pipeline_fn in as one of the following: ttir_to_ttnn_backend_pipeline, ttir_to_ttmetal_backend_pipeline (both found in ttmlir.passes), or a custom pipeline built with create_custom_pipeline_fn. The default if none is provided is the TTNN pipeline.

def run_ttir_pipeline(

module,

pipeline_fn: Callable,

pipeline_options: List[str] = [],

dump_to_file: bool = True,

output_file_name: str = "test.mlir",

system_desc_path: Optional[str] = None,

mesh_dict: OrderedDict[str, int] = None,

argument_types_string: Optional[str] = None,

)

TTNN example

Let's expand on our previous example

from ttmlir.passes import ttir_to_ttnn_backend_pipeline

from builder.base.builder import Operand

from builder.ttir.ttir_builder import TTIRBuilder

from builder.base.builder_utils import build_ttir_module, run_ttir_pipeline

shapes = [(32, 32), (32, 32), (32, 32)]

def model(in0: Operand, in1: Operand, in2: Operand, builder: TTIRBuilder):

add_0 = builder.add(in0, in1)

multiply_1 = builder.multiply(in1, add_0)

return builder.multiply(multiply_1, in2)

module, builder = build_ttir_module(model, shapes)

ttnn_module = run_ttir_pipeline(module, ttir_to_ttnn_backend_pipeline)

Returns

An MLIR module lowered into TTNN

#dram = #ttnn.buffer_type<dram>